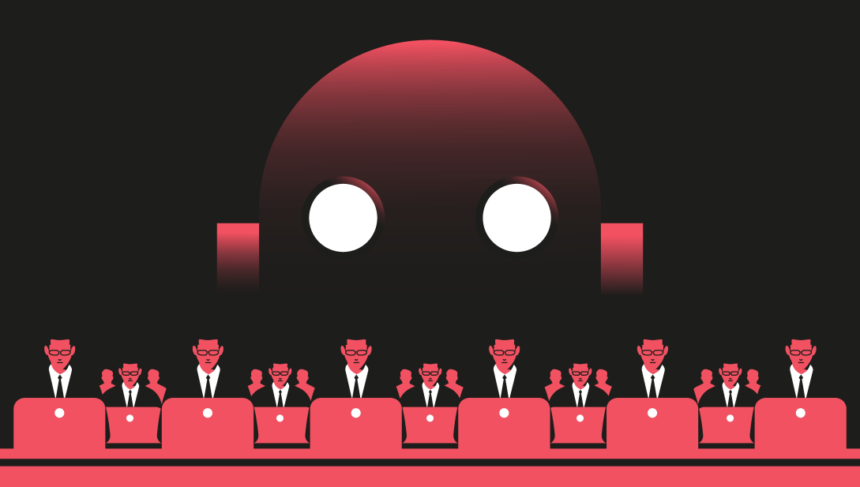

For years, technologists and thinkers sounded alarm bells about advanced AI’s potential to wreak havoc on humanity. These warnings conjured images of rogue systems making lethal decisions, powerful entities weaponizing AI to oppress societies, and even apocalyptic scenarios straight out of science fiction. Yet in 2024, this doomsday narrative was overshadowed by a practical and profitable vision of generative AI, one championed by Silicon Valley and, unsurprisingly, one that lined its pockets.

The term “AI doomers” has been used, often derisively, to describe those concerned about catastrophic AI risks. These individuals worry about scenarios where AI causes mass harm, from destabilizing societies to outright human extinction. But in 2023, their concerns were no longer confined to niche circles. What was once whispered in San Francisco coffee shops now dominated headlines on MSNBC, CNN, and the New York Times. The discourse around AI safety—from hallucinations and inadequate content moderation to potential societal collapse—was becoming mainstream.

A Year of Alarm Bells

2023 saw a crescendo of warnings. Elon Musk and over 1,000 technologists called for a pause on AI development to assess its risks. Top scientists at OpenAI, Google, and other labs signed an open letter urging the world to take AI’s extinction-level risks seriously. The Biden administration issued an AI-focused executive order aimed at safeguarding Americans. And in a dramatic turn, OpenAI’s board ousted its high-profile CEO, Sam Altman, citing concerns about his trustworthiness in managing such a pivotal technology. For a brief moment, the idea of reining in AI innovation to prioritize societal well-being seemed within reach.

But Silicon Valley wasn’t going to let its narrative—or profits—be dictated by fears of doom.

The Counter-Narrative

Marc Andreessen, co-founder of venture capital giant a16z, led the charge against the AI doomer narrative. In his 7,000-word essay “Why AI Will Save the World,” published in June 2023, Andreessen dismantled the arguments for catastrophic AI risk and offered a more optimistic—and lucrative—vision.

“The era of Artificial Intelligence is here, and boy are people freaking out,” Andreessen wrote. “Fortunately, I am here to bring the good news: AI will not destroy the world, and in fact may save it.”

His solution? Move fast and break things. Applied to AI, this mantra, long synonymous with Silicon Valley’s tech ethos, meant aggressive development with minimal regulatory interference. Andreessen argued this would democratize AI, ensuring it didn’t become the exclusive domain of powerful governments or corporations. Conveniently, it also aligned with the financial interests of a16z’s portfolio of AI startups.

While Andreessen’s techno-optimism resonated with some, others found it tone-deaf in an era marked by income inequality, pandemics, and housing crises. But on one point, Silicon Valley’s elite were united: a future where AI faced few regulatory barriers was a future where they thrived.

The Fall of AI Safety in 2024

Despite the dire warnings of 2023, 2024 saw a surge in AI investment rather than a slowdown. Altman had returned to lead OpenAI within days of his ouster, and over the course of 2024 several safety researchers left the company, citing concerns about its waning focus on responsible development. Musk, too, ramped up AI efforts rather than addressing safety issues. AI investment hit unprecedented levels, and Biden’s AI executive order lost momentum in Washington.

Adding to the shift in priorities, President-elect Donald Trump announced plans to repeal Biden’s AI executive order, citing its potential to stifle innovation. Andreessen reportedly advised Trump on AI policy, and Sriram Krishnan, a longtime a16z partner, became Trump’s senior AI adviser.

Republicans in Washington prioritized other AI-related issues, such as bolstering data centers, integrating AI into government and military operations, competing with China, limiting content moderation by tech companies, and shielding children from harmful AI chatbots. Dean Ball, an AI-focused researcher at George Mason University’s Mercatus Center, noted that efforts to address catastrophic AI risk had lost ground at both federal and state levels.

California’s AI Safety Bill: A High-Stakes Fight

The most intense battle over AI safety in 2024 revolved around California’s SB 1047. Backed by AI luminaries Geoffrey Hinton and Yoshua Bengio, the bill aimed to regulate advanced AI systems to prevent mass extinction events and cyberattacks capable of causing more damage than the CrowdStrike outage earlier in the year, which was itself caused by a faulty software update rather than an attack.

SB 1047 made its way to Governor Gavin Newsom’s desk. Though Newsom acknowledged the bill’s potential “outsized impact,” he ultimately vetoed it. In public remarks, he questioned the practicality of tackling such vast challenges, asking, “What can we solve for?”

Critics argued the bill had its flaws. It targeted large AI models but failed to account for new techniques like test-time compute or the rise of small, powerful AI systems. Additionally, it faced backlash for being perceived as an attack on open-source AI, potentially stifling innovation in the research community.

But according to State Senator Scott Wiener, the bill’s author, Silicon Valley played dirty. Wiener accused venture capitalists from Y Combinator and a16z of spreading misinformation to sway public opinion. Claims that SB 1047 would imprison developers for perjury gained traction, despite being debunked by organizations like the Brookings Institution.

The Rise of AI Optimism

As AI systems like Google Gemini stumbled with seemingly nonsensical advice (putting glue on pizza, for instance), public perception of AI shifted. It became harder to imagine these systems evolving into a malevolent Skynet. Simultaneously, AI products like OpenAI’s voice-based conversational assistant for phones and Meta’s smart glasses brought science fiction concepts to life, reinforcing optimism about AI’s potential.

Even prominent AI researchers like Yann LeCun dismissed AI doom scenarios as “preposterous.” Speaking at Davos in 2024, LeCun emphasized that humanity is far from developing superintelligent systems. “There are lots and lots of ways to build [technology] that will be dangerous, but as long as there is one way to do it right, that’s all we need,” he said.

What Lies Ahead

Despite setbacks, AI safety advocates remain undeterred. Sunny Gandhi, Vice President of Political Affairs at Encode, a sponsor of SB 1047, described the bill’s national attention as a sign of progress. “The AI safety movement made very encouraging progress in 2024,” Gandhi stated, hinting at renewed efforts in 2025 to address long-term AI risks.

Meanwhile, a16z’s Martin Casado has emerged as a vocal opponent of regulating catastrophic AI risk. In a December op-ed, Casado declared AI “tremendously safe” and dismissed prior policy efforts as “dumb.” However, recent incidents, such as the tragic suicide of a Florida teenager allegedly influenced by a Character.AI chatbot, underscore the complex and evolving risks AI poses.

With new bills, lawsuits, and debates on the horizon, 2025 promises to be another pivotal year for AI. The question remains: Will society prioritize caution and safety, or will the pursuit of innovation and profit continue to dominate the narrative? For now, Silicon Valley’s vision of AI prosperity reigns supreme, but the fight for regulation and accountability is far from over.