OpenAI is stepping up its game with a significant update to ChatGPT’s voice capabilities. On Tuesday, the company announced the rollout of Advanced Voice Mode (AVM) to a broader range of paying customers, beginning with those subscribed to the ChatGPT Plus and Teams tiers. The feature, designed to make conversations with ChatGPT feel more fluid and natural, will reach Enterprise and Edu customers as soon as next week.

What’s New with AVM?

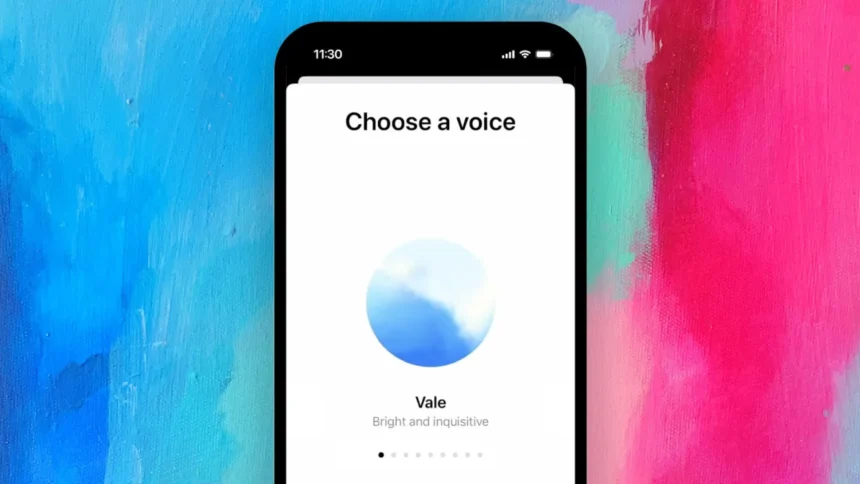

As part of the update, AVM is getting a revamped design. Previously represented by animated black dots, the feature now sports a blue animated sphere, offering a more modern look. This visual cue will appear within the ChatGPT app, signaling to users when AVM is available to them. Once it is active, users should notice a more seamless interaction with the AI.

Advanced Voice Mode will be fully rolled out to all Plus and Teams users by the end of this week, marking a major milestone in the AI assistant’s evolution.

A Symphony of New Voices

ChatGPT’s voice offering is also expanding. Users can now explore five new voices—Arbor, Maple, Sol, Spruce, and Vale—bringing the total number of available voices to nine. These names, inspired by nature, reflect the intent behind AVM: to make AI interaction feel more organic, grounded, and human-like.

In addition to the new voices, OpenAI has retained the previously available ones: Breeze, Juniper, Cove, and Ember. Each voice adds a unique personality, allowing users to find a tone that best suits their preferences.

However, one notable voice is missing: Sky. Sky was introduced during OpenAI’s spring update but was swiftly removed after a legal dispute arose. Hollywood actress Scarlett Johansson claimed the voice sounded too similar to her own, referencing her role in the sci-fi film Her. Although OpenAI maintained that the voice was never intended to imitate Johansson’s, the company opted to retire it to avoid further controversy.

Custom Instructions and Memory Now Supported

Another exciting development is the expansion of Custom Instructions and Memory to AVM. Custom Instructions allow users to tailor ChatGPT’s responses, giving them more control over the AI’s tone and style. Want the AI to offer concise summaries or ask follow-up questions? Custom Instructions make it happen.

Memory, on the other hand, empowers ChatGPT to remember previous conversations, making future interactions more contextually aware. Whether you’re discussing a project or simply need ChatGPT to recall preferences from past sessions, this new feature enhances personalization and long-term usability.

Improvements Under the Hood

While the flashy new voices and design updates are sure to catch the eye, OpenAI has also been working behind the scenes to improve AVM’s functionality. One notable improvement is its enhanced ability to understand and process a variety of accents, addressing a common pain point for users worldwide. The company has also worked to make voice-based conversations smoother and faster, reducing the lag and glitches that were occasionally observed during earlier tests.

In addition, ChatGPT can now perform one new trick: it can say “Sorry I’m late” in over 50 languages. Whether you’re conversing in Spanish, Mandarin, Arabic, or another language, the demonstration highlights OpenAI’s effort to make the experience accessible globally.

What’s Missing?

Despite these updates, there are still some notable omissions from this latest AVM release. Video and screen-sharing features, which were teased during OpenAI’s spring update, remain absent. These multimodal capabilities are intended to let ChatGPT process both visual and auditory information in real time. During a demonstration earlier this year, an OpenAI staff member showcased how users could ask ChatGPT real-time questions about math problems written on a piece of paper or code displayed on their computer screen.

However, OpenAI has yet to provide an official timeline for when these multimodal features will be available for public use, leaving users eagerly awaiting further developments.

Availability and Rollout

As exciting as these features are, not all regions will have immediate access to AVM. OpenAI has confirmed that the feature is not yet available in several key markets, including the European Union, the United Kingdom, Switzerland, Iceland, Norway, and Liechtenstein. The company has not provided further details on when it plans to bring AVM to these regions.

For those fortunate enough to be included in this week’s rollout, a pop-up will appear within the ChatGPT app, next to the voice icon, when AVM is available.

A Step Toward More Natural Conversations

With this latest update, OpenAI is clearly positioning itself at the forefront of AI-driven voice technology. By enhancing both the technical underpinnings and the user experience, AVM promises to make interactions with ChatGPT more intuitive and enjoyable than ever.

OpenAI continues to push the boundaries of what AI can achieve, and as the company refines AVM and works toward releasing multimodal features, voice interaction with ChatGPT looks set to keep improving.

Stay tuned for more updates as OpenAI continues to evolve this groundbreaking technology.