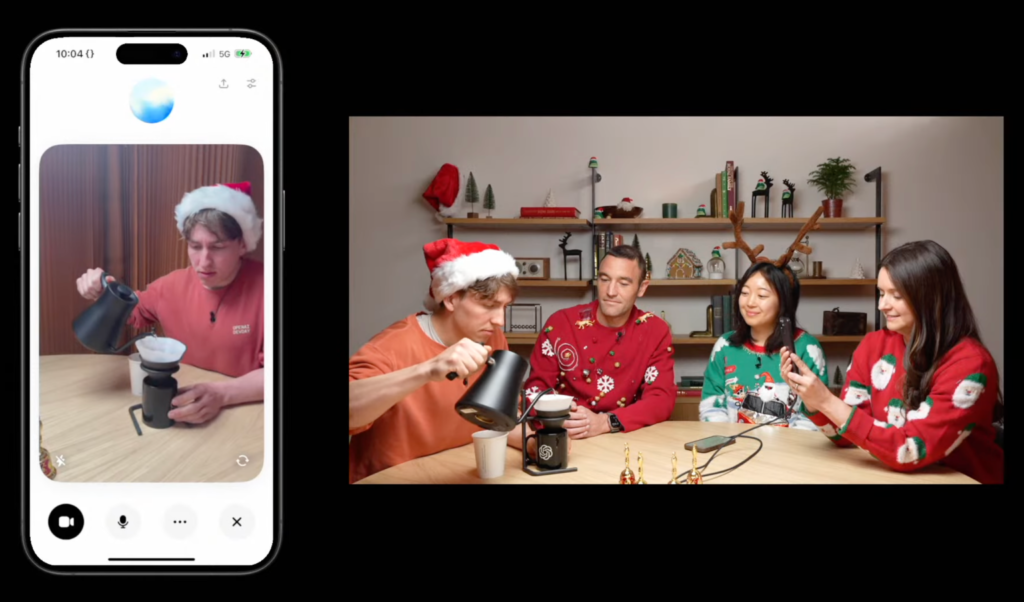

Nearly seven months after OpenAI first teased the feature, ChatGPT now supports real-time video analysis. During a livestream on Thursday, OpenAI released the long-awaited update, which adds video capabilities to ChatGPT's Advanced Voice Mode. Here's how it works, who can access it, and what it means for the future of conversational AI.

Bringing Vision to Advanced Voice Mode

Advanced Voice Mode, known for its human-like conversational abilities, now understands what it sees as well. Subscribers to ChatGPT Plus, Team, or Pro can point their phone camera at objects in the ChatGPT app and get responses in near real time. With screen sharing, ChatGPT can also interpret what is on the device's display, not just what is in front of the camera.

For instance, the app can help users decipher settings menus, solve math problems, or even explain the contents of an image. To enable this feature, users simply need to:

- Tap the voice icon next to the chat bar in the ChatGPT app.

- Select the video icon on the bottom left to activate video mode.

- For screen sharing, tap the three-dot menu and choose “Share Screen.”

The result is a single conversation that blends voice, text, and visual input.

A Staggered Rollout with Limitations

OpenAI plans to roll out Advanced Voice Mode with vision gradually, starting Thursday and completing the process within the next week. However, not all users will gain immediate access.

- Delayed for Enterprise and Education Plans: ChatGPT Enterprise and Edu subscribers will have to wait until January to access the feature.

- Unavailable in Certain Regions: Users in the European Union, Switzerland, Iceland, Norway, and Liechtenstein won’t see the update for now, with no confirmed timeline for these regions.

The staggered schedule likely reflects a mix of regulatory compliance, technical readiness, and the need to gather user feedback as OpenAI scales the feature globally.

A Glimpse Into the Future of AI: The 60 Minutes Demo

During a recent appearance on CBS's 60 Minutes, OpenAI President Greg Brockman demonstrated Advanced Voice Mode with vision. In the segment, Brockman had ChatGPT quiz Anderson Cooper on anatomy: as Cooper sketched body parts on a blackboard, ChatGPT analyzed his drawings in real time.

“The location is spot on,” ChatGPT commented, acknowledging that Cooper had correctly placed the brain. It added, “As for the shape, it’s a good start. The brain is more of an oval.”

The demo highlighted both the promise and the limits of the technology. ChatGPT's feedback on the drawings was on target, but in the same demo it made a mistake on a geometry problem, a reminder that it is still prone to hallucinations and inaccuracies.

A Long Road to Launch

The road to this release has been anything but smooth. OpenAI first showed Advanced Voice Mode with vision in May, promising a rollout “within a few weeks.” The feature was then repeatedly delayed, reportedly because it had been announced before it was ready and because of technical hurdles. When Advanced Voice Mode finally arrived in early fall, it shipped without visual capabilities and supported voice only. Since then, OpenAI has continued to refine the technology, expand its availability, and work through regional compliance issues, particularly in the EU.

Competition Heats Up

OpenAI isn't alone in the race to build real-time, video-capable AI. This week, Google took its own step forward with Project Astra, a conversational AI feature that can analyze video in real time and is currently being tested by a select group of Android users. Meta is also reportedly working on similar technology, adding to the competitive pressure.

A Touch of Holiday Cheer: Santa Mode

Alongside the vision rollout, OpenAI added a playful touch to ChatGPT with “Santa Mode,” which lets users talk with ChatGPT in Santa's voice. To activate it, tap or click the snowflake icon next to the prompt bar in the ChatGPT app.

What’s Next for ChatGPT?

With Advanced Voice Mode with vision, OpenAI has once again raised the bar for AI-driven tools. Real-time video analysis opens up new possibilities in education, accessibility, and productivity. At the same time, the errors seen in demos and the uneven regional rollout are reminders that the technology still has clear limits.

As OpenAI continues to refine its offerings, competition from Google and Meta is likely to keep the pressure on. For now, though, vision support strengthens ChatGPT's position as a frontrunner in a fast-moving AI landscape.