At its September 2024 Apple Event, Apple unveiled AI-powered visual search, a feature built on Apple Intelligence, the company's suite of artificial intelligence capabilities. The feature is coming to the new iPhone 16 lineup, including the iPhone 16 and iPhone 16 Plus. It gives users a quick way to look up information about the places and objects around them.

The Camera Control Button: Unlocking “Visual Intelligence”

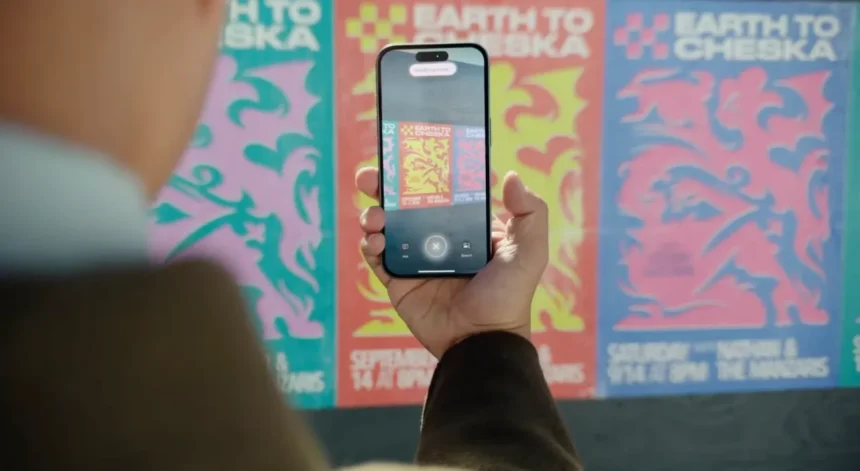

A major highlight of the iPhone 16 and 16 Plus is the new Camera Control button, which introduces what Apple calls "visual intelligence." The feature combines reverse image search with text recognition, letting users turn whatever the camera sees into information they can act on.

Visual intelligence can help with tasks such as identifying a restaurant or reading an event flier. Point the camera at a restaurant, and it pulls up key details such as opening hours and ratings, along with options to view the menu or make a reservation. The whole lookup starts from the Camera Control button, with no need to open a separate app first.

Or say you come across a flier for an upcoming event. Visual intelligence can extract the relevant details (title, date, time, and location) and add them to your calendar, so you don't have to type them in by hand.

Privacy at the Core

Apple continues its focus on user privacy with this feature. The company says that images processed by visual intelligence are not stored on Apple's servers, in line with its broader commitment to protecting user data.

OpenAI Collaboration: ChatGPT at Your Fingertips

Apple has also teamed up with OpenAI to extend what visual intelligence can do. Through this partnership, iPhone 16 users can send visual queries directly to ChatGPT using the Camera Control button. For example, if you are stuck on a homework problem, you can use visual intelligence to capture the question and send it to ChatGPT for help.

This collaboration brings generative AI to the iPhone through a tool people already use every day, the camera, which makes the device more versatile.

When Will You Get It?

Visual intelligence is part of the broader rollout of Apple Intelligence, which Apple says will begin in beta in October 2024 for U.S. English users, with visual intelligence itself following later in the year. Support for localized English in other countries is planned for December 2024, and additional languages are expected to roll out over the course of 2025.

This launch marks a significant step in Apple's integration of AI into everyday use. With its privacy focus and practical, real-world uses, visual intelligence could quickly become one of the most-used tools on the iPhone.

Whether it's helping you make dinner reservations or work through a tough homework problem, AI-powered visual search on the iPhone 16 is set to change how we interact with our devices and with the world around us.