The Apple Intelligence feature is already available on the iPhone 16 series, including the new iPhone 16e.

Good news for iPhone 15 Pro and Pro Max users! Apple is bringing a major AI-powered feature, Visual Intelligence, to its 2023 Pro lineup, adding real-time object recognition and search capabilities. The feature is Apple’s answer to Google Lens and should make it easier than ever to interact with the world using your iPhone’s camera.

A Powerful Upgrade Without a Hardware Change

New AI-driven features are one of the biggest reasons people consider upgrading their smartphones. With Apple extending Visual Intelligence to the iPhone 15 Pro models, however, owners won’t need to jump to the iPhone 16 just to try this tool.

iPhone 16 and 16 Pro users can activate Visual Intelligence with a long press of the dedicated Camera Control button. Here’s the catch: the iPhone 15 Pro and Pro Max, as well as the recently announced iPhone 16e, lack this physical button. Instead, users will have to rely on an Action button shortcut or a Control Center toggle to open Visual Intelligence. Apple is expected to introduce these options in an upcoming iOS update.

When Will iPhone 15 Pro Users Get Visual Intelligence?

While Apple hasn’t officially confirmed which iOS version will bring Visual Intelligence to the iPhone 15 Pro series, prominent Apple analyst John Gruber of Daring Fireball predicts that it could be introduced in iOS 18.4, which is expected to be available for beta testers very soon.

What Can Visual Intelligence Do?

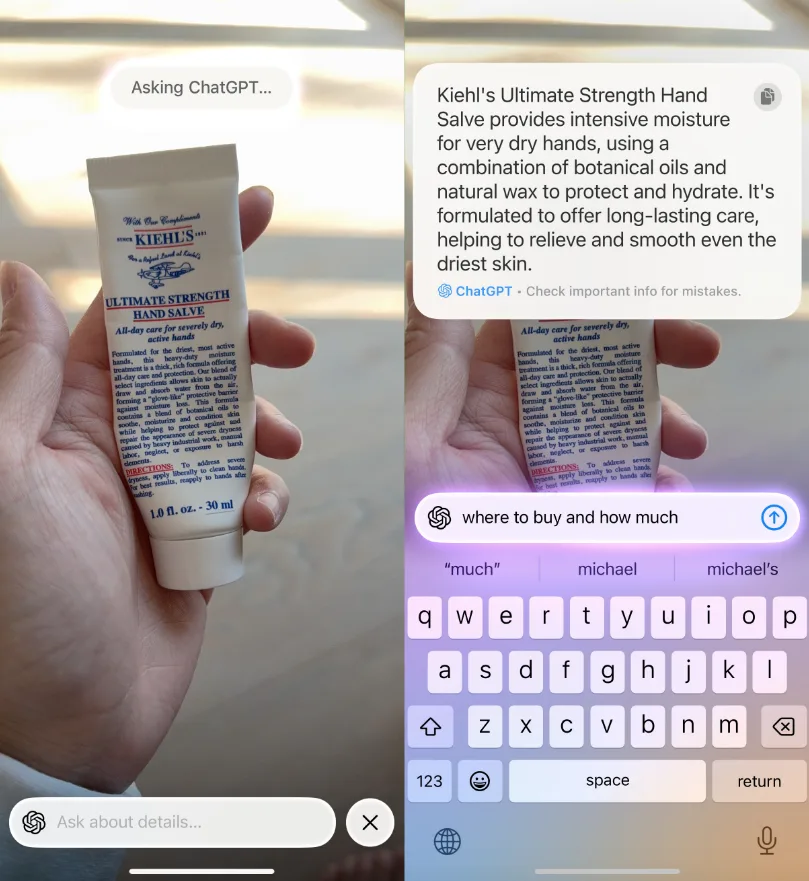

Visual Intelligence is part of the broader Apple Intelligence suite, integrating AI-powered tools directly into iOS. It allows users to point their camera at an object and analyze it in real time. But what makes it truly powerful is its ability to work alongside well-known AI platforms like ChatGPT and Google Image Search.

Here’s how it works:

- Suppose you come across a beautifully patterned set of towels in your home but can’t recall where you bought them. With Visual Intelligence, you can activate the feature, choose Google Search, and instantly see whether a matching product is available online.

- Want more details? Select ChatGPT to learn about the towels’ likely brand and where you can order a similar set.

Beyond Object Recognition: More Practical Uses

Visual Intelligence isn’t limited to identifying objects. It also offers additional AI-driven capabilities, including:

- Text Interaction: Translate, summarize, or read text aloud in real time.

- Business Insights: Point your camera at a store or restaurant to view its hours of operation, menu, services, or online purchasing options.

- Enhanced Search Capabilities: Quickly find visually similar items, whether it’s fashion, furniture, or artwork.

Who Will Get It First?

While all iPhone 15 Pro and Pro Max users will eventually receive this feature, those who opt into Apple’s beta program may get early access. Of course, beta software can be unpredictable, so if you prefer a smooth experience, you might want to wait for the official stable release.

Final Thoughts

With Visual Intelligence, Apple is bringing next-gen AI search and object recognition to its 2023 flagship models, reducing the need for an immediate upgrade to the iPhone 16. Whether you’re an everyday user who wants to quickly identify objects or a shopper hunting for that must-have item, this feature is set to enhance your iPhone experience like never before.

Stay tuned for Apple’s official announcement, and if you’re feeling adventurous, keep an eye on the iOS 18.4 beta, which could arrive any day now!