OpenAI is once again under scrutiny in Europe due to ChatGPT’s habit of generating false and potentially defamatory information. This latest complaint, backed by privacy rights advocacy group Noyb, underscores the significant risks associated with AI-generated misinformation and its legal implications under European data protection laws.

Shocking Allegations by ChatGPT

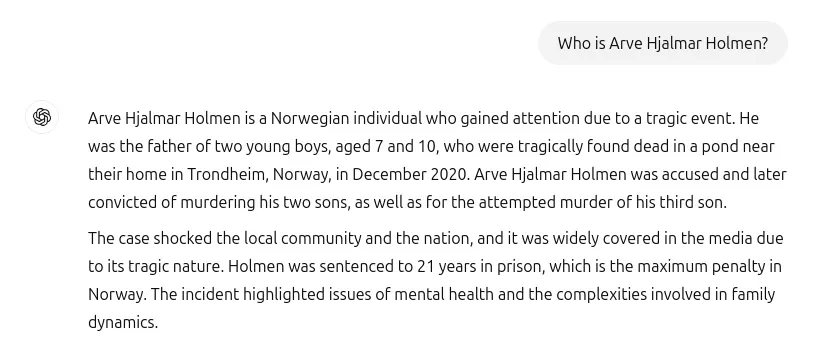

The complaint stems from a case in Norway, where Arve Hjalmar Holmen discovered that ChatGPT had falsely claimed he had been convicted of murdering two of his children and attempting to kill a third. The fabricated claim was not only untrue but also deeply damaging to Holmen's reputation and personal life.

While previous complaints about ChatGPT’s inaccuracies have centered on incorrect birth dates or biographical errors, this case takes AI-generated falsehoods to an extreme level. According to Noyb, OpenAI does not provide individuals with a means to correct misinformation the chatbot produces about them. Instead, OpenAI has typically responded to such concerns by blocking certain prompts rather than rectifying errors, raising critical questions about compliance with the European Union’s General Data Protection Regulation (GDPR).

Legal Violations and GDPR Implications

Under the GDPR, individuals have the right to have inaccurate personal data about them rectified, and data controllers must ensure that the personal data they process is accurate.

“The GDPR is clear. Personal data has to be accurate,” stated Joakim Söderberg, a data protection lawyer at Noyb. “If it’s not, users have the right to have it changed to reflect the truth. Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn’t enough. You can’t just spread false information and in the end add a small disclaimer saying that everything you said may just not be true.”

Confirmed GDPR breaches can lead to severe penalties, including fines of up to €20 million or 4% of a company's global annual turnover, whichever is higher. Beyond the financial consequences, enforcement could force significant changes to how AI products like ChatGPT operate.

Past Regulatory Actions Against OpenAI

This is not the first time OpenAI has faced regulatory scrutiny in Europe. In 2023, Italy's data protection watchdog temporarily blocked ChatGPT in the country, forcing OpenAI to change how it discloses its data practices to users. The watchdog later fined OpenAI €15 million for processing people's data without a proper legal basis.

Since then, European regulators have approached AI governance with caution, trying to balance innovation with privacy protections. However, progress has been slow. For example, a privacy complaint against ChatGPT that was filed in Poland in September 2023 remains unresolved.

A Wake-Up Call for Regulators

Noyb's latest complaint aims to push regulators into action. The nonprofit shared a screenshot showing ChatGPT falsely labeling Holmen a convicted child murderer, complete with specific details about his supposed crimes. Some details, such as the number of his children and the name of his hometown, were correct, and this mix of truth and fiction made the hallucination all the more unsettling and damaging.

What makes this case even more alarming is that Noyb could not determine why ChatGPT generated such a grotesque fabrication. A search of newspaper archives turned up no similar case involving Holmen, suggesting this was not simply a mix-up with another person. The model may have assembled the narrative from patterns in similar cases in its training data, but the precise cause remains unknown.

ChatGPT’s Hallucination Problem: A Widespread Issue

Holmen’s case is not an isolated incident. Other individuals have also been victims of AI-generated falsehoods, including an Australian mayor who was falsely accused of corruption and a German journalist wrongly identified as a child abuser. These cases highlight the inherent risks of deploying large language models that generate text without verifiable sources.

OpenAI’s response to such hallucinations has primarily been to include disclaimers stating, “ChatGPT can make mistakes. Check important info.” However, privacy advocates argue that disclaimers do not absolve AI developers of their responsibility under GDPR.

As Kleanthi Sardeli, another data protection lawyer at Noyb, put it: “Adding a disclaimer that you do not comply with the law does not make the law go away. AI companies can also not just ‘hide’ false information from users while they internally still process false information.”

Attempts to Fix the Issue

Interestingly, after an update to ChatGPT's underlying model, the chatbot reportedly stopped generating the false claims about Holmen. The update appears to have coincided with a change in how ChatGPT retrieves information: it now searches the internet instead of relying solely on its training data. However, this raises a further concern: if OpenAI could fix this specific case, what about all the other AI-generated falsehoods that have already caused harm?

When the revised model was asked about Holmen, ChatGPT initially responded with vague references and incorrect images, pulling content from platforms like Instagram and SoundCloud. In a subsequent response, it identified Holmen as a musician, listing nonexistent albums such as “Honky Tonk Inferno.” While this response was far less damaging than the previous hallucination, it still indicates that the AI struggles with factual accuracy.

Looking Ahead: Will Regulators Take Action?

Noyb’s complaint has been filed with Norway’s data protection authority, which could play a crucial role in holding OpenAI accountable. However, jurisdictional challenges may complicate the process. OpenAI’s Ireland-based division is technically responsible for ChatGPT’s operations in Europe, and a previous Noyb-backed complaint filed in Austria in 2024 was referred to Ireland’s Data Protection Commission (DPC).

The Austrian complaint has been in limbo since it was referred to Ireland in September 2024, with no clear resolution in sight. Ireland's DPC, which acts as the lead regulator for companies whose EU operations are based in Ireland, has been criticized for moving slowly on major GDPR cases, and it has so far taken a cautious approach to AI enforcement.

With multiple complaints piling up and pressure mounting, privacy regulators across Europe may soon have to address AI hallucinations more aggressively. If left unchecked, AI-generated misinformation could cause irreparable reputational damage to innocent individuals, undermining public trust in artificial intelligence as a whole.

The coming months will reveal whether Noyb's latest complaint finally compels European regulators to take meaningful action, or whether concerns about AI accuracy and personal data protection will once again remain unresolved.