Researchers say that despite a confident tone, OpenAI’s chatbot search gave ‘partially or entirely incorrect responses’ to most of their requests.

When OpenAI rolled out its web-search tool in October 2024 for ChatGPT Plus subscribers, it promised “fast, timely answers with links to relevant web sources.” The feature seemed like a game-changer for AI-assisted research, offering users the ability to stay informed with real-time, sourced information. However, a recent investigation by Columbia University’s Tow Center for Digital Journalism has revealed significant flaws in the tool’s performance, raising questions about its reliability and truthfulness.

The Study: Testing ChatGPT’s Accuracy

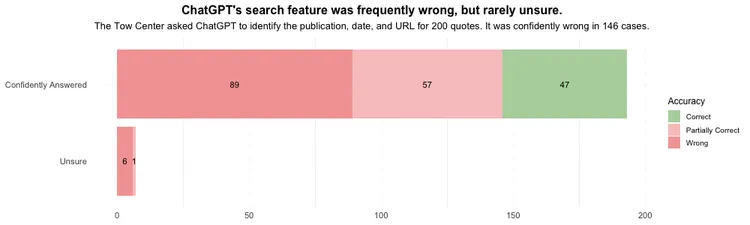

The researchers conducted a detailed analysis to assess the tool's ability to identify the origins of quoted material. Their test involved 200 quotes from 20 different publications, including publications that explicitly block OpenAI's search crawler from accessing their content. The results painted a troubling picture of inaccuracy and overconfidence.

Of the 200 quotes tested:

- 153 responses were partially or completely incorrect.

- Only 7 responses admitted uncertainty, using phrases like “it appears,” “it’s possible,” or “I couldn’t locate the exact article.”

- Many responses confidently cited false or irrelevant sources, presenting incorrect information as fact.

For instance, ChatGPT misattributed a letter to the editor published in the Orlando Sentinel to a Time magazine article. In another case, when asked to identify the source of a quote from a New York Times article on endangered whales, it cited a different website that had plagiarized the original story.

Missteps in Misattribution

The most concerning issue identified in the study was ChatGPT’s inability to recognize or admit when it lacked sufficient information. Instead of flagging uncertainty, it often provided false details with unearned confidence. This tendency is particularly problematic when users rely on the tool for research or fact-checking.

The tool’s limitations became more evident when it attempted to access content from publishers who had explicitly disallowed OpenAI’s web crawler. Despite being unable to access the original articles, ChatGPT still produced responses, many of which were fabricated or misleading.

OpenAI’s Response to Criticism

When approached for comment, OpenAI downplayed the severity of the findings, calling the Tow Center's test "atypical" and disputing its methodology. "Misattribution is hard to address without the data and methodology that the Tow Center withheld," the company told the Columbia Journalism Review.

Even so, OpenAI acknowledged the need to improve accuracy, promising to "keep enhancing search results." The company emphasized its commitment to transparency and to continued refinement of the tool.

What Does This Mean for Users?

For subscribers relying on ChatGPT’s search tool, these findings underscore the importance of verifying information independently. While the tool offers impressive potential for summarizing content and providing quick links, it is far from foolproof. Users should approach its responses with caution, particularly when accuracy is critical.

The Tow Center’s findings also highlight a broader issue in AI-generated content: the risk of spreading misinformation through seemingly authoritative responses. Without clear disclaimers or indicators of uncertainty, users may inadvertently trust false information, amplifying its impact.

The Road Ahead for AI Search Tools

AI search tools like ChatGPT's represent a step forward in natural language processing and information retrieval, but their success hinges on striking the right balance between usability and accuracy. Misattribution, overconfidence, and a lack of transparency are challenges that OpenAI and the broader AI industry must address to maintain user trust.

For now, the promise of “fast, timely answers” remains aspirational. Until OpenAI delivers on its commitment to improvement, users are advised to treat ChatGPT’s search results as a starting point rather than a definitive source.

This ongoing debate serves as a reminder: even the most advanced AI systems are works in progress. And when it comes to seeking the truth, there’s no substitute for critical thinking and thorough verification.