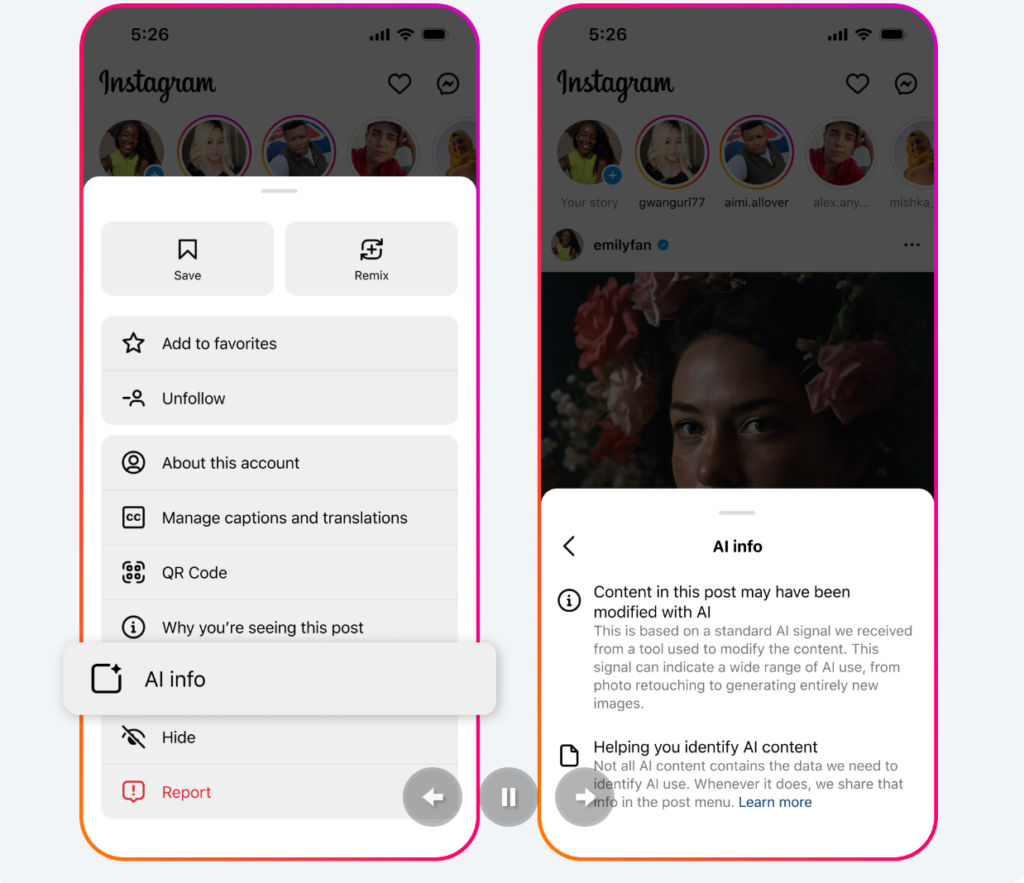

Meta is making significant changes to how it labels content that has been edited or modified using AI tools across its platforms, including Instagram, Facebook, and Threads. In a shift that could impact how users perceive AI-altered content, the company is relocating the “AI info” label from its prominent position under a user’s name to the post’s menu for content that has been modified by AI tools.

Previously, the AI label appeared directly beneath the creator’s name, immediately signaling to users that the post had been edited or enhanced with AI. With this update, the label will keep that position on posts that Meta detects as generated entirely by AI, but it will be tucked into the post’s menu for content that has only been edited with AI-powered tools. This change could make it harder for users to identify AI-enhanced content at first glance.

However, Meta says that content generated entirely from AI prompts will still display the label prominently. According to the company, this approach draws a clearer distinction between content created by AI and content merely edited with AI tools. Meta will also disclose whether AI-generated content was identified through industry-standard detection methods or through self-disclosure by the creator.

Why the Change?

Meta says the goal of this update, which will roll out next week, is to offer users a more accurate reflection of the extent to which AI has been used in content creation. As AI tools become increasingly integrated into creative workflows, ranging from minor photo edits to fully automated content generation, Meta believes that its labeling system should better differentiate between these varying degrees of AI use.

But this move may raise concerns. With the AI info label placed in a less visible location, some worry that users could be more easily misled by content that was edited using AI tools. As AI-powered editing becomes more sophisticated, the line between human creativity and machine assistance can blur, potentially leading to confusion or a loss of trust in content authenticity.

A History of Evolving Labels

This isn’t Meta’s first attempt to fine-tune its AI labeling. As generative AI technology has evolved, so too has Meta’s approach to identifying and labeling such content. In July, the company switched from the label “Made with AI” to the more general “AI info.” The change came in response to feedback from professional photographers, who reported that the original label was being applied to real photos that had only been edited with AI, not generated from scratch.

Meta acknowledged that the initial wording wasn’t clear enough, leading to misinterpretations. Users, the company said, didn’t fully understand that content flagged with the “Made with AI” label might not have been created by an AI program, but rather enhanced with AI tools. The shift to “AI info” was meant to address that confusion, offering a more nuanced way to inform users about how AI contributed to the creation of the content.

Implications for the Future

As AI tools continue to reshape content creation across digital platforms, the balance between transparency and user experience becomes ever more crucial. Meta’s latest adjustment could spark further debate about how content modified by AI should be presented to audiences and whether the use of such tools should remain more visible in the digital ecosystem.

Meta’s decision to make AI info labels harder to find for edited content could be seen as a move that prioritizes user interface cleanliness over transparency. As AI-powered tools become increasingly ubiquitous and sophisticated, the challenge will lie in ensuring that users can continue to trust the content they consume, even as the boundaries between human creativity and machine assistance continue to blur.

For now, Meta’s changes seem designed to strike a balance between providing the necessary information about AI involvement and not overwhelming users with labels. However, as the use of AI in content creation expands, it remains to be seen whether this more subtle approach will be enough to maintain trust and understanding among its vast user base.