Meta has launched Llama 4, the latest collection of AI models in its Llama family, in an unusual weekend release. The launch brings changes in architecture, efficiency, and multimodal capability that Meta says will power its next generation of AI products.

Introducing the Llama 4 Family

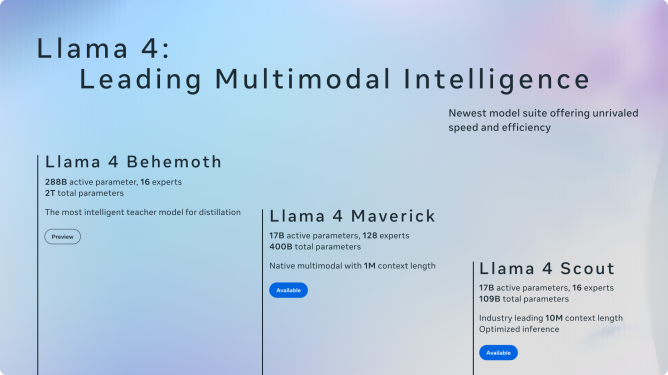

The Llama 4 lineup features three distinct models:

- Llama 4 Scout

- Llama 4 Maverick

- Llama 4 Behemoth (still in training)

Meta says each model was trained on large amounts of unlabeled text, image, and video data to give it what the company calls “broad visual understanding.” The collection also introduces a new architecture and, in Behemoth, a far larger total parameter count than any previous Llama release.

What’s New in Llama 4?

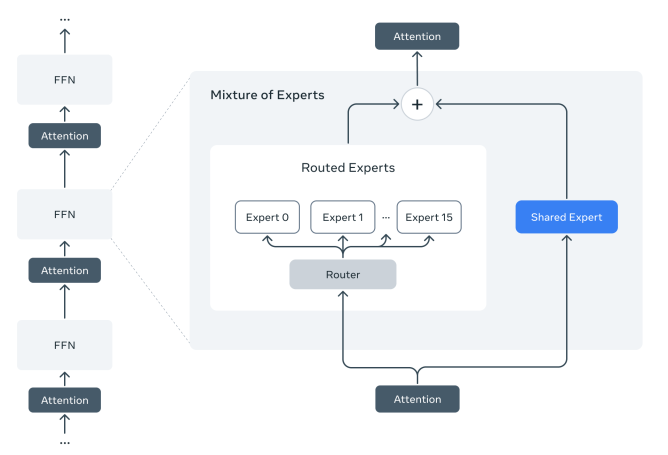

For the first time, Meta has employed a Mixture of Experts (MoE) architecture across all Llama 4 models. Rather than running every parameter on every input, an MoE model routes each token to a small number of specialized sub-networks, or “experts.” The result is more efficient training and inference, because compute scales with the experts actually used rather than with the model’s total size.
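
As a rough illustration of the routing idea, the sketch below implements generic top-k gating in plain Python. It is not Meta’s router: the function names (`route_token`, `moe_layer`), the four toy experts, and the fixed router scores are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k=1):
    """Return [(expert_index, weight), ...] for the top_k highest-scoring experts."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(token, experts, router, top_k=1):
    """Run the token through only the selected experts and mix their outputs."""
    routes = route_token(router(token), top_k)
    return sum(weight * experts[i](token) for i, weight in routes)

# Toy setup (hypothetical): four "experts" that scale a scalar token differently.
experts = [lambda x, k=k: x * (k + 1) for k in range(4)]
# A real router is a learned layer; fixed scores keep the example deterministic.
router = lambda x: [0.1, 2.0, 0.3, -1.0]

output = moe_layer(3.0, experts, router, top_k=1)
print(output)
```

With `top_k=1`, only one of the four experts runs for the token. That is where the savings come from: total parameters grow with the number of experts, while per-token work does not.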

Take Maverick, for example: it has 400 billion total parameters spread across 128 experts, but only 17 billion of them are active for any given token. Scout has 109 billion total parameters, 16 experts, and the same 17 billion active parameters. Because per-token compute tracks active rather than total parameters, both models cost far less to run than dense models of the same total size.
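
To make the ratio concrete, this short sketch computes the share of parameters that is active per token, using only the figures quoted above. The dictionary layout and the helper name `active_fraction` are assumptions for illustration.

```python
# Parameter counts in billions, as quoted in this article.
MODELS = {
    "Scout": {"total_b": 109, "active_b": 17, "experts": 16},
    "Maverick": {"total_b": 400, "active_b": 17, "experts": 128},
}

def active_fraction(total_b, active_b):
    """Fraction of the model's parameters that is active for one token."""
    return active_b / total_b

for name, spec in MODELS.items():
    share = active_fraction(spec["total_b"], spec["active_b"])
    print(f"{name}: {share:.2%} of parameters active, {spec['experts']} experts")
```

The shares work out to roughly 16% for Scout and roughly 4% for Maverick, so Maverick holds almost four times as many parameters as Scout while activating the same number per token.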

Use Cases and Capabilities

According to Meta, Maverick excels in general assistant and conversational scenarios, including coding support, reasoning, multilingual understanding, and visual interpretation. It has reportedly outperformed models like GPT-4o and Gemini 2.0 in internal benchmarks — although it still trails slightly behind top-tier models such as GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.5 Pro.

Scout, on the other hand, is aimed at use cases that require very long inputs. Its 10 million-token context window lets it take in extremely long documents, summarize across them, and navigate complex codebases. Meta says Scout fits on a single Nvidia H100 GPU when quantized to Int4, which makes it unusually accessible for developers.
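
A back-of-the-envelope calculation shows why the single-GPU claim depends on precision. Meta’s announcement attaches the claim to Int4 quantization. The sketch below counts weight storage only, and it assumes the 80 GB H100 variant. The helper name `weight_memory_gb` is hypothetical, and the KV cache and activations (which grow with context length) are deliberately ignored.

```python
def weight_memory_gb(total_params_billion, bits_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    bytes_total = total_params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9

H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant
SCOUT_TOTAL_B = 109  # total parameters in billions, from the article

for bits in (16, 8, 4):
    need = weight_memory_gb(SCOUT_TOTAL_B, bits)
    verdict = "fits" if need <= H100_MEMORY_GB else "does not fit"
    print(f"{bits}-bit weights: ~{need:.1f} GB, {verdict} in {H100_MEMORY_GB} GB")
```

Under these assumptions, only the 4-bit case fits on one card. Serving very long contexts would need additional memory on top of the weights.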

Meta’s third model, Behemoth, is still in training. It has nearly 2 trillion total parameters, with 288 billion active across 16 experts, and is designed for heavy-duty tasks like mathematical problem-solving and STEM applications. Meta claims it outperforms top models like GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro in several STEM-focused evaluations, though Gemini 2.5 Pro remains ahead in some categories.

Model Availability and Licensing

Llama 4 Scout and Maverick are now publicly available via Llama.com and select platforms including Hugging Face. However, Behemoth remains in development and will be released at a later stage.

Developers and companies looking to deploy Llama 4 should be aware of license limitations. Entities located or headquartered in the European Union are barred from using or distributing Llama 4 models — likely due to compliance issues with the EU’s strict AI and data privacy regulations. Additionally, companies with over 700 million monthly active users must obtain special licensing from Meta, which retains full discretion over approvals.

Integration Across Meta’s Ecosystem

Meta has already integrated Llama 4 into Meta AI, its assistant available in WhatsApp, Messenger, Instagram, and other products. The updated assistant is live in 40 countries, but its multimodal features are limited to English-language users in the U.S. for now.

A Shift in AI Responsiveness

One of the more notable changes in Llama 4 is its approach to sensitive or contentious questions. Unlike earlier models, which were often overly cautious or declined to answer politically charged queries, Llama 4 is designed to be more open and balanced. According to Meta, the model is tuned to provide helpful, factual responses across a broader range of perspectives without injecting judgment or bias.

This move comes amid rising political scrutiny of AI bias. Critics, including allies of President Donald Trump such as tech entrepreneur Elon Musk, have accused popular AI models of being overly “woke” and biased against conservative viewpoints. In response, AI companies, including Meta and OpenAI, have been working to broaden their models’ ability to respond to controversial topics with nuance, transparency, and factual integrity.

Final Thoughts

Llama 4 represents a significant evolution in Meta’s open-weight AI strategy. With its shift to MoE architecture, expanded multimodal capabilities, and willingness to answer a broader range of queries, Meta is positioning the Llama family as a serious contender in a fast-moving field.

As Meta puts it:

“These Llama 4 models mark the beginning of a new era for the Llama ecosystem. This is just the beginning.”

With Behemoth still in development and future iterations likely in the pipeline, Meta is making it clear that the race to build the most capable, transparent, and accessible AI is far from over — and Llama 4 is just getting started.