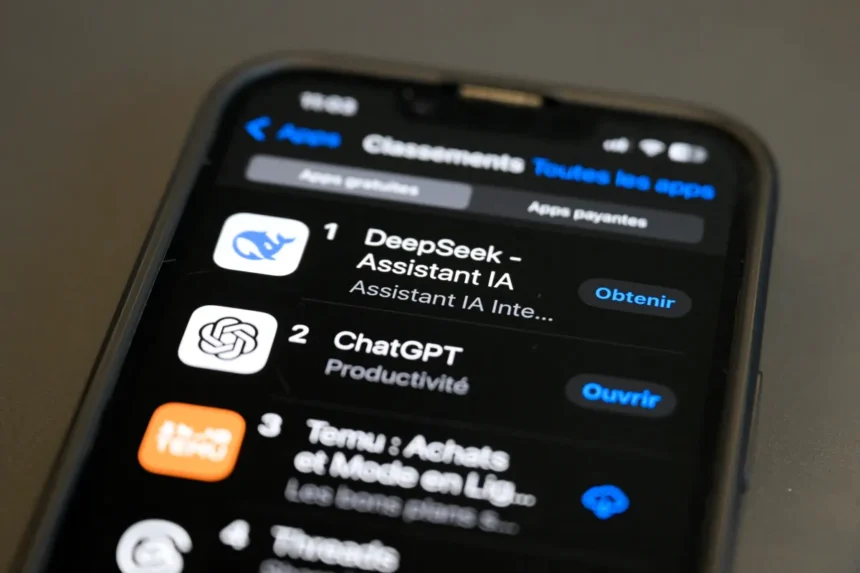

The world of artificial intelligence is no stranger to controversy, and the latest storm centers on Chinese AI company DeepSeek. Just hours after entrepreneur and investor David Sacks alleged that DeepSeek may have used OpenAI’s models to train its own, Bloomberg Law reported that Microsoft had launched an investigation into the company’s activities.

Microsoft’s security researchers suspect that DeepSeek, the company behind the R1 reasoning model, might have extracted large volumes of data through OpenAI’s API during the fall of 2024. Given Microsoft’s significant stake in OpenAI, the tech giant quickly flagged the suspicious activity and alerted OpenAI to the potential breach.

Did DeepSeek Violate OpenAI’s Terms of Service?

While OpenAI allows developers to access its API to build applications, its terms of service explicitly prohibit using API-generated output to train competing AI models. The policy states:

“You are prohibited from […] using Output to develop models that compete with OpenAI.”

OpenAI also forbids the automated or programmatic extraction of data or output from its API, a rule designed to prevent mass data harvesting. These restrictions exist to keep OpenAI’s proprietary technology from being reverse-engineered or repurposed by other AI labs seeking to develop rival models.

The Role of Model Distillation in AI Development

One of the key concerns in this investigation is whether DeepSeek used a technique known as model distillation, in which one AI model (the student) is trained on the responses of a more advanced model (the teacher). This process lets developers transfer knowledge and capabilities from a high-performing AI system into a smaller, more efficient one.

If DeepSeek did use OpenAI’s API in this way, it could have trained its own models using OpenAI’s intellectual property while bypassing much of the resource-intensive process of training a model from scratch. The open question is whether DeepSeek found a way to circumvent OpenAI’s rate limits and query its API at an industrial scale.
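To make the student-teacher idea concrete, here is a minimal toy sketch of distillation in Python. It is only an illustration of the general technique and says nothing about what DeepSeek or any other company actually did. Everything in it is an assumption made for the example: the hypothetical `teacher_predict` function stands in for a large model, the "student" is a two-parameter logistic model, and the inputs are random numbers rather than text prompts.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def teacher_predict(x: float) -> float:
    """Hypothetical teacher: returns P(label = 1 | x).

    Stands in for a large, expensive model that we can only query.
    """
    return sigmoid(3.0 * x - 1.5)


def distill(num_queries: int = 200, epochs: int = 2000,
            lr: float = 1.0, seed: int = 0) -> tuple[float, float]:
    """Train a tiny student model purely on the teacher's responses."""
    rng = random.Random(seed)

    # Step 1: query the teacher and record its responses (soft labels).
    inputs = [rng.uniform(-2.0, 2.0) for _ in range(num_queries)]
    soft_labels = [teacher_predict(x) for x in inputs]

    # Step 2: fit the student (one weight, one bias) to those responses
    # by gradient descent on the cross-entropy against the soft labels.
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in zip(inputs, soft_labels):
            p = sigmoid(w * x + b)
            grad_w += (p - target) * x
            grad_b += p - target
        w -= lr * grad_w / num_queries
        b -= lr * grad_b / num_queries
    return w, b


def student_predict(x: float, w: float, b: float) -> float:
    return sigmoid(w * x + b)


if __name__ == "__main__":
    w, b = distill()
    for x in (-1.0, 0.0, 0.5, 1.0):
        print(x, teacher_predict(x), student_predict(x, w, b))
```

The student never sees any original training data, only the teacher's answers to the queries it was sent. That is why the number of queries matters: the more responses a student can collect, the more closely it can imitate the teacher.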

Potential Legal and Industry Ramifications

If Microsoft’s suspicions are confirmed, DeepSeek could face serious legal and financial consequences. OpenAI has robust legal agreements in place to prevent API misuse, and any proven violations could result in lawsuits, hefty fines, or outright bans from accessing OpenAI’s technology.

Beyond the legal ramifications, this case could send shockwaves through the AI industry. If companies are found exploiting major AI models for their own gain, it could lead to stricter enforcement of API policies, more stringent access controls, and a general reevaluation of how AI companies protect their intellectual property.

The Bigger Picture: China, AI, and Global Competition

This investigation also highlights broader tensions in the AI space, particularly the ongoing race between U.S. and Chinese firms to dominate the industry. With AI becoming a crucial geopolitical asset, companies and governments alike are closely monitoring potential IP theft, regulatory violations, and unauthorized technology transfers.

DeepSeek, a rising player in China’s AI scene, has been pushing boundaries with its research and development. However, if it is found to have overstepped ethical or legal lines, it could face not only legal action but also reputational damage that may impact its partnerships, funding, and access to future technology.

What’s Next?

As the investigation unfolds, several key questions remain unanswered:

- Did DeepSeek systematically extract OpenAI-generated data to train its own models?

- If so, how did it manage to bypass OpenAI’s safeguards?

- What legal actions, if any, will OpenAI or Microsoft pursue?

- How will this impact the broader AI industry and the regulatory landscape?

With AI development progressing at breakneck speed, the outcome of this case could set important precedents for how companies enforce data protection, IP rights, and ethical AI development.

For now, all eyes are on Microsoft, OpenAI, and DeepSeek as the tech industry waits to see how this high-stakes AI showdown will play out.