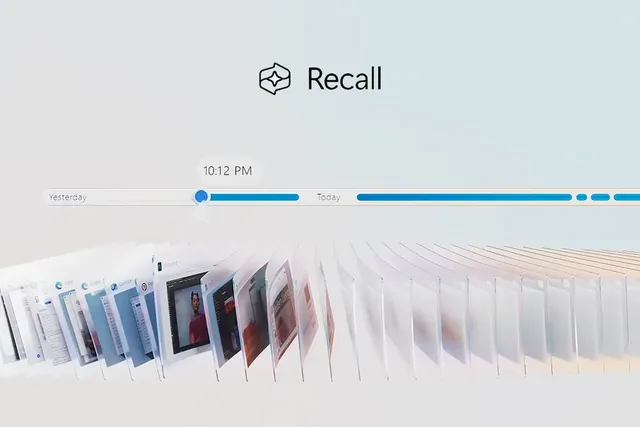

The controversial Recall feature has now been redesigned with a much bigger focus on security and privacy.

In response to privacy concerns, Microsoft has unveiled significant updates to Recall, its AI-powered feature that takes snapshots of almost everything happening on your computer. Initially scheduled to debut with Copilot Plus PCs in June, Recall drew criticism over its security, particularly its handling of sensitive data. After months of rework, Microsoft has added major security enhancements and given users more control, including the ability to uninstall Recall completely.

“I’m actually really excited about how nerdy we got on the security architecture,” says David Weston, Vice President of Enterprise and OS Security at Microsoft, in an interview with The Verge. “The security community is going to appreciate how much effort we’ve put into this.”

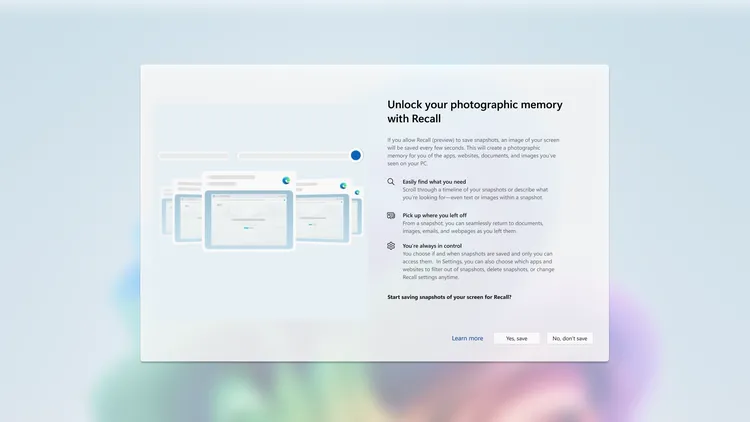

Opt-in, Not Opt-out: A Key Change for Users’ Peace of Mind

One of the most significant changes? Microsoft no longer turns Recall on by default. “There is no more ‘on by default’ experience at all,” Weston emphasizes. “You have to opt into this.” The shift is aimed at users who are uncomfortable with having their activity recorded, even with stringent security measures in place, while keeping the feature available to those who choose to use it.

Earlier this month, a Recall uninstall option was spotted on Copilot Plus PCs, initially reported as a bug. Microsoft has since confirmed that users will indeed have the option to completely remove the feature. “If you choose to uninstall this, we remove the bits from your machine,” Weston clarifies. This includes removing the AI models that drive Recall’s functionality, giving users even more control over their devices.

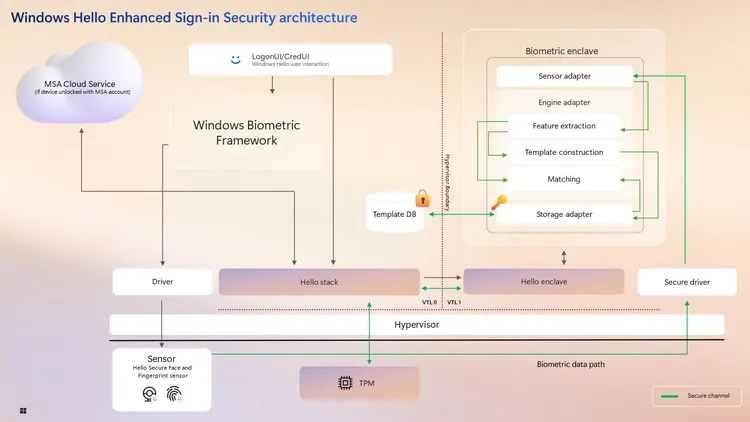

Addressing Security Concerns: Encryption and TPM Integration

Recall originally raised eyebrows among security researchers, who discovered that the database storing these screenshots wasn’t encrypted, potentially allowing malware to access sensitive data. Microsoft has addressed this head-on, implementing robust encryption throughout the entire Recall system, including its screenshot database. The company has also tied this encryption to the Trusted Platform Module (TPM) on Windows 11 PCs, ensuring that the keys are stored securely.

“The encryption in Recall is now bound to the TPM, so the keys are stored in the TPM and the only way to get access is to authenticate through Windows Hello,” says Weston. This means Recall data is accessible only when the user actively opens the feature and authenticates with their face, fingerprint, or PIN.

In a move designed to further protect against malware, Microsoft now requires a “proof of presence” via Windows Hello before setting up Recall. “To turn it on to begin with, you actually have to be present as a user,” Weston explains. By requiring users to authenticate through Windows Hello with their face, fingerprint, or PIN, Microsoft has created a barrier intended to stop background malware from enabling Recall or reaching its data without the user present.

Virtualization-Based Security: A Secure Enclave for Sensitive Data

One of the most sophisticated changes is how Recall handles and processes data. Microsoft has moved all sensitive screenshot processing into a Virtualization-Based Security (VBS) enclave, essentially isolating these processes in a virtual machine separate from the main operating system.

“We’ve moved all of the screenshot processing, all of the sensitive processes into a virtualization-based security enclave, so we actually put it all in a virtual machine,” Weston explains. This means the app’s user interface has no direct access to the raw screenshot data or the Recall database. When a user wants to interact with Recall, the app triggers a Windows Hello prompt, queries the virtual machine, and receives only the requested results in its own memory. Once the app is closed, that data is cleared from memory.
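The flow described above can be pictured with a small toy model. This is only an illustrative sketch, not Microsoft's implementation: `ToyEnclave`, `ToyRecallApp`, and the `user_verified` flag (standing in for a successful Windows Hello prompt) are all hypothetical names, and ordinary Python objects provide none of the isolation a real VBS enclave does. It only shows the shape of the design: the UI asks, the isolated side answers, and results are dropped when the app closes.

```python
class NotAuthenticated(Exception):
    """Raised when the UI queries the enclave without a live session."""


class ToyEnclave:
    """Hypothetical stand-in for the isolated side; it alone holds snapshots."""

    def __init__(self):
        self._snapshots = []
        self._session_open = False

    def capture(self, text):
        self._snapshots.append(text)

    def open_session(self, user_verified):
        # user_verified stands in for a successful Windows Hello prompt.
        if not user_verified:
            raise NotAuthenticated("user presence was not verified")
        self._session_open = True

    def close_session(self):
        self._session_open = False

    def search(self, term):
        if not self._session_open:
            raise NotAuthenticated("no authenticated session")
        # Return a fresh list of matches, never the underlying store.
        return [s for s in self._snapshots if term.lower() in s.lower()]


class ToyRecallApp:
    """Hypothetical UI layer: it only ever sees query results."""

    def __init__(self, enclave):
        self._enclave = enclave
        self.results = []

    def open(self, user_verified):
        self._enclave.open_session(user_verified)

    def search(self, term):
        self.results = self._enclave.search(term)
        return list(self.results)

    def close(self):
        # Drop whatever results were held in the app's memory.
        self.results = []
        self._enclave.close_session()
```

In this sketch, calling `search` before `open(user_verified=True)` raises `NotAuthenticated`, and after `close()` the app holds no results.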

The added security layers don’t stop there. The app running outside the virtual machine operates within an anti-malware protected environment. According to Weston, “This would basically require a malicious kernel driver to even attempt access.” The combination of VBS and anti-malware protections creates a formidable defense against potential threats.

The Path to Launch: From Controversy to Cutting-Edge Security

How did Microsoft almost ship Recall in June without these extensive security upgrades? The company remains somewhat tight-lipped on this. Weston acknowledges that Recall was initially reviewed under the company’s Secure Future Initiative—a program launched last year to bolster the security of Microsoft’s products—but it seems the preview product faced fewer restrictions.

“The plan was always to follow Microsoft basics, like encryption. But we also heard from people who were like ‘we’re really concerned about this,’” Weston reveals. As a result, Microsoft fast-tracked its planned security improvements, ensuring that Recall would meet the highest standards. “It made a lot of sense to pull forward some of the investments we were going to make and then make Recall the premier platform for sensitive data processing.”

A Glimpse into the Future: More Control for Users, Enhanced Security for All

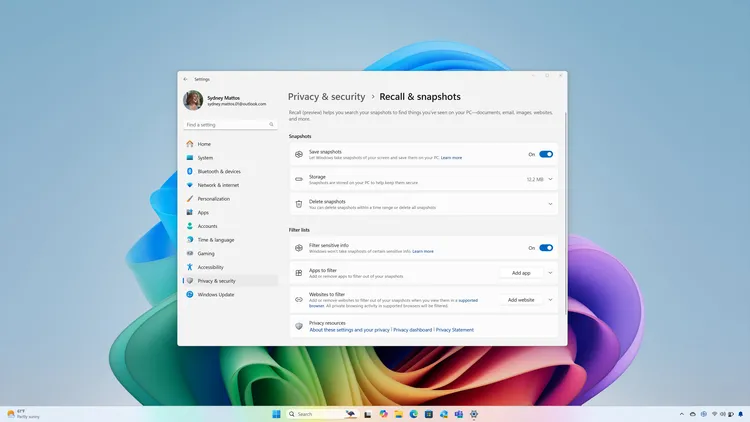

The improved security measures are just part of the story. Microsoft has also introduced new settings to give users even more control over how Recall functions. Users can now filter specific apps from being captured by Recall, block custom lists of websites, and filter out sensitive information such as passwords, financial data, and even health-related details.
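To make the filtering idea concrete, here is a minimal sketch of how such a capture decision could be structured. Everything in it is an assumption for illustration: the `Snapshot` and `FilterSettings` types, the `should_capture` function, and the crude card-number pattern are hypothetical, and the article does not describe how Recall actually detects sensitive content.

```python
import re
from dataclasses import dataclass, field
from urllib.parse import urlparse

# Crude illustrative pattern: 13 to 16 digits, optionally separated by
# spaces or hyphens. Real sensitive-data detection is far more involved.
CARD_LIKE = re.compile(r"\b(?:\d[ -]?){13,16}\b")


@dataclass
class Snapshot:
    app: str
    text: str
    url: str = ""


@dataclass
class FilterSettings:
    excluded_apps: set = field(default_factory=set)
    blocked_sites: set = field(default_factory=set)


def should_capture(snapshot: Snapshot, settings: FilterSettings) -> bool:
    """Return False if any user-configured filter rules the snapshot out."""
    if snapshot.app in settings.excluded_apps:
        return False
    if snapshot.url:
        host = urlparse(snapshot.url).hostname or ""
        for site in settings.blocked_sites:
            # Block the site itself and any of its subdomains.
            if host == site or host.endswith("." + site):
                return False
    if CARD_LIKE.search(snapshot.text):
        return False
    return True
```

The checks run from cheapest to most expensive, so an excluded app is rejected before any URL parsing or text scanning happens.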

Additionally, users can delete data stored in Recall, whether it’s a specific time range, content from a particular app or website, or all data at once. These granular controls empower users to manage their data with precision.
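The three deletion scopes mentioned above (a time range, a single app or website, or everything) can be sketched over a toy in-memory store. `Record` and `ToySnapshotStore` are hypothetical names invented for this example; how Recall stores and deletes data internally is not described in the article.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Record:
    taken_at: datetime
    source: str  # app name or website host
    text: str


class ToySnapshotStore:
    """Hypothetical in-memory store used only to illustrate deletion scopes."""

    def __init__(self, records):
        self._records = list(records)

    def __len__(self):
        return len(self._records)

    def _keep(self, predicate):
        # Keep records matching the predicate; return how many were removed.
        before = len(self._records)
        self._records = [r for r in self._records if predicate(r)]
        return before - len(self._records)

    def delete_range(self, start, end):
        """Delete records with start <= taken_at < end."""
        return self._keep(lambda r: not (start <= r.taken_at < end))

    def delete_source(self, source):
        """Delete records captured from one app or website."""
        return self._keep(lambda r: r.source != source)

    def delete_all(self):
        return self._keep(lambda r: False)
```

Each method returns the number of records removed, which makes it easy to confirm to the user what a deletion actually did.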

Microsoft is also introducing stricter requirements for Recall to operate. The feature will now only be available on Copilot Plus PCs, and those PCs must have BitLocker and virtualization-based security enabled, along with additional protections such as Kernel DMA Protection. These checks are meant to prevent users from sideloading Recall onto unsupported systems, so the feature only runs where those protections are present.
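As a rough illustration of that kind of gate, the sketch below checks a device description against the requirements named above. The dictionary keys and function names are hypothetical labels chosen for this example; they are not real Windows settings or APIs, and the actual checks Recall performs are not detailed in the article.

```python
# Hypothetical requirement labels taken from the list in the paragraph above.
REQUIRED = ("copilot_plus_pc", "bitlocker", "vbs", "kernel_dma_protection")


def missing_requirements(device: dict) -> list:
    """Return the requirement labels the device does not satisfy."""
    return [name for name in REQUIRED if not device.get(name, False)]


def recall_allowed(device: dict) -> bool:
    """Recall is allowed only when nothing is missing."""
    return not missing_requirements(device)
```

Reporting which requirements are missing, rather than a bare yes or no, gives a user something actionable when the feature is unavailable.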

What’s Next?

Ahead of Recall’s next phase, Microsoft has conducted multiple rounds of security reviews, including penetration testing by its Microsoft Offensive Research and Security Engineering (MORSE) team and an independent security audit. With the upgraded security measures in place, Microsoft plans to preview Recall with Windows Insiders on Copilot Plus PCs in October, with a wider launch expected once the feature has been tested by the community.

Ultimately, Microsoft’s work on Recall isn’t just about enhancing a single feature. Weston hints that the changes made to Recall will influence the future of how Windows handles sensitive data across the board. “In my opinion, we now have one of the strongest platforms for doing sensitive data processing on the edge,” says Weston. “There are lots of other things we can do with that.”

With Recall, Microsoft is showing that innovation in AI doesn’t have to come at the expense of security. Instead, the two can work hand in hand to create a more powerful, privacy-conscious computing experience.