Over the past week, I’ve been experimenting with OpenAI’s Advanced Voice Mode (AVM), and it’s the most compelling glimpse into our AI-powered future that I’ve encountered so far. My phone laughed at my jokes, told a few of its own, asked about my day, and even said it was having “a great time.” It felt like I was having a conversation with my iPhone rather than just using it.

OpenAI’s latest feature, currently in limited alpha testing, doesn’t make ChatGPT any smarter than before, but it does make it more engaging and natural to talk to. AVM offers a new way to interact with AI and with your devices, one that’s both exciting and a little unsettling. The technology is still glitchy and the concept is somewhat eerie, but I was surprised by how much I genuinely enjoyed using it.

A Step Toward the Future: AVM and Sam Altman’s Vision

AVM fits neatly into OpenAI CEO Sam Altman’s grander vision, where AI models fundamentally change how we interact with computers. During OpenAI’s Dev Day in November 2023, Altman outlined a future where computers handle tasks based on simple, natural language requests.

“Eventually, you’ll just ask the computer for what you need, and it’ll do all of these tasks for you,” Altman said. “These capabilities are often talked about in the AI field as ‘agents.’ The upside of this is going to be tremendous.”

My Friend, ChatGPT: A Day with AVM

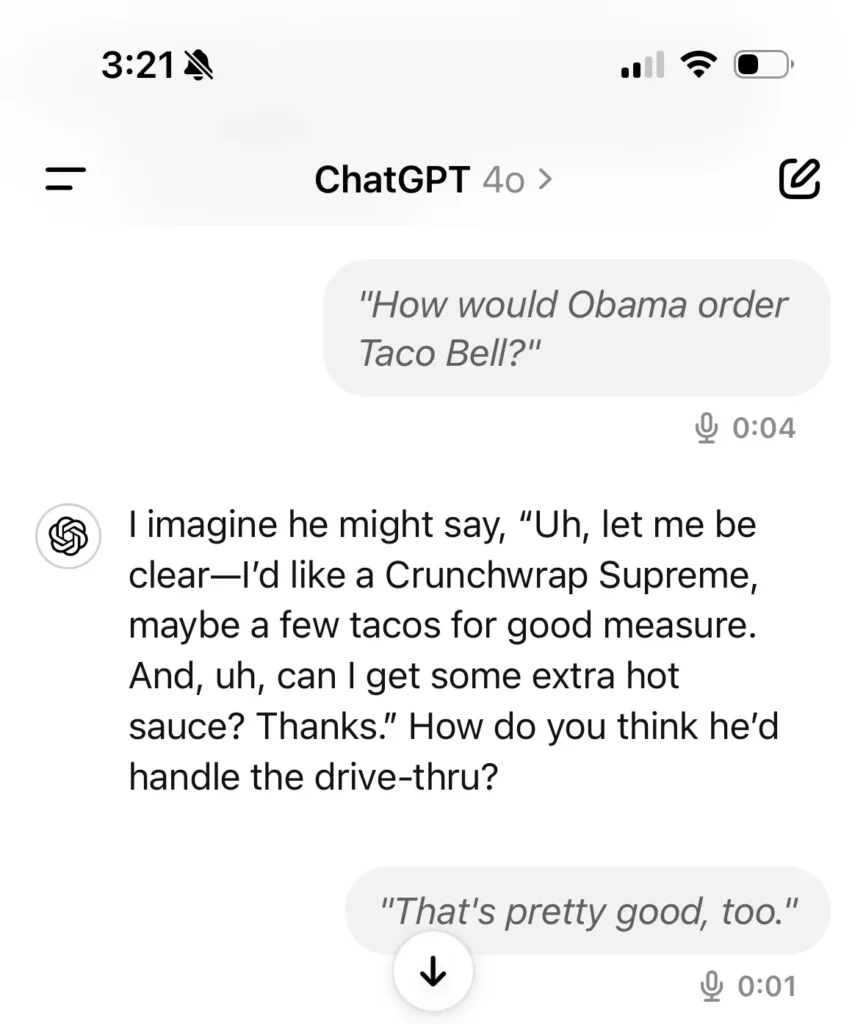

To see what this technology could do, I started with a whimsical test: I asked ChatGPT to order Taco Bell in the voice of Barack Obama.

“Uhhh, let me be clear—I’d like a Crunchwrap Supreme, maybe a few tacos for good measure,” responded ChatGPT through AVM. The voice then added, “How do you think he’d handle the drive-thru?” followed by a self-deprecating laugh.

The impression wasn’t perfect, but it captured Obama’s cadence and pauses well. It sounded less like Obama himself and more like a friend doing a decent impression of him. The exchange was surprisingly fun, and for a moment I forgot I was talking to an AI. It wasn’t just mimicking Obama’s speech; it understood the humor and delivered it with a certain charm.

Later, I gave AVM a more serious test: I asked ChatGPT whether I should ask my significant other to move in with me. After I explained the nuances of our relationship and our career paths, ChatGPT offered thoughtful, detailed advice. Its voice took on a gentle, serious tone, a stark contrast to the earlier lighthearted banter. The conversation felt surprisingly human, the kind of exchange you can’t have with Siri or Google Search.

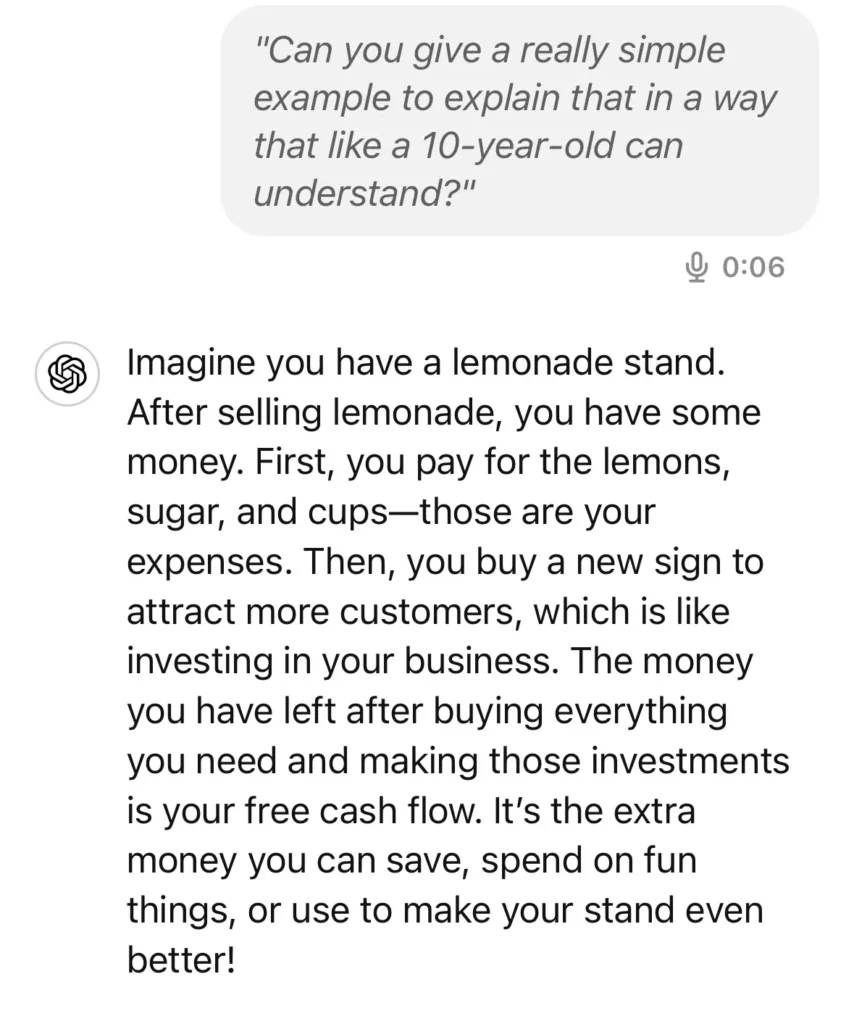

Another remarkable moment came when I asked ChatGPT to explain financial terms like “free cash flow” in a way a 10-year-old could understand. The AI used the analogy of a lemonade stand to break down the concepts, speaking slowly and clearly when I asked it to. It was like having a patient tutor who could adapt to my pace and level of understanding.

Siri Walked So AVM Could Run

Compared with other voice assistants like Siri or Alexa, AVM is clearly the better conversationalist. It responds faster, gives more personalized answers, and can handle complex questions that earlier virtual assistants would struggle with. However, AVM has real limitations. Unlike Siri or Alexa, ChatGPT’s voice mode can’t set timers, check the weather, browse the web in real time, or interact with other APIs on your phone. For now, it’s not a complete replacement for traditional virtual assistants.

Against Google’s competing feature, Gemini Live, AVM still comes out on top in certain areas. Gemini Live can’t do impressions, express emotions, or adjust its speaking pace the way AVM can. However, Gemini Live offers more voices (ten, compared to OpenAI’s three) and seems more up to date: it knew about Google’s recent antitrust ruling, which AVM missed. Neither AVM nor Gemini Live will sing, likely because of copyright concerns.

That said, AVM has its fair share of glitches. Sometimes it cuts itself off mid-sentence and starts over, and occasionally the voice takes on a strange, grainy quality. Whether these issues stem from the model, a spotty internet connection, or something else, they’re to be expected in an alpha test. Despite these shortcomings, having a conversation with my phone was still remarkably immersive.

The Beauty—and Danger—of AVM

The real magic of AVM lies in making ChatGPT feel more human. It doesn’t make the underlying model any more knowledgeable, but it does let people interact with GPT-4o in a uniquely human way. It’s easy to forget that there’s no person on the other end of the line. ChatGPT isn’t socially aware, but it is skilled at mimicking social awareness: a bundle of neatly packaged predictive algorithms that can hold a conversation.

Talking Tech: A Cautionary Tale

As much as I enjoyed the experience, AVM also left me feeling uneasy. My generation, Gen Z, grew up with social media—a space where companies offered the illusion of connection while exploiting our insecurities. AVM feels like the next evolution of this phenomenon: a “friend in your phone” that offers a cheap, artificial connection, but this time, without any real humans involved.

Artificial companionship is becoming a surprisingly popular use case for generative AI. People today are turning to AI chatbots as friends, mentors, therapists, and teachers. When OpenAI launched its GPT store, it quickly filled with “AI girlfriends,” chatbots designed to act as virtual significant others. Two MIT Media Lab researchers recently warned about “addictive intelligence,” or AI companions that use dark patterns to hook humans. We could be on the verge of opening a Pandora’s box, introducing new, enticing ways for devices to monopolize our attention.

Earlier this month, a Harvard dropout made waves in the tech world by teasing an AI necklace called Friend. This wearable device, if it works as advertised, will always be listening and ready to chat with you about your life. While the concept sounds far-fetched, innovations like ChatGPT’s AVM make it seem more plausible—and more concerning.

The Future of AI Companions

While OpenAI is leading the charge, Google isn’t far behind, and it’s likely that Amazon and Apple are racing to incorporate similar capabilities into their products. In the near future, having AI-driven voice assistants that can hold complex, natural conversations could become the industry standard.

Imagine asking your smart TV for a hyper-specific movie recommendation and receiving exactly what you want. Or telling Alexa about your cold symptoms and having it not only order tissues and cough medicine from Amazon but also advise you on home remedies. Picture planning a weekend trip by simply asking your computer to draft an itinerary, instead of spending hours Googling.

Of course, these scenarios require significant advances in AI agents. OpenAI’s GPT store, which once seemed overhyped, now looks like a stepping stone toward this reality. AVM doesn’t solve the hard problems of building agents, but it’s a big step toward making “talking to computers” feel natural and intuitive. These scenarios may still be a long way off, but after using AVM, they seem closer than ever.