AI models have demonstrated remarkable capabilities across various domains, but the key question remains: what tasks should we trust them with? Ideally, AI should be relegated to the drudgery that bogs down human potential — and there’s no shortage of that in research and academia. Reliant, a promising new player in the AI landscape, is poised to tackle the time-consuming data extraction work that has long been the domain of weary graduate students and interns.

“The best application of AI is one that enhances human experience by reducing menial labor, allowing people to focus on what truly matters,” said CEO Karl Moritz. Having spent years in the research world alongside co-founders Marc Bellemare and Richard Schlegel, Moritz understands that literature review is a prime example of this “menial labor.”

Every scientific paper builds on previous research, but identifying and extracting relevant sources from the vast sea of published studies is a daunting task. In some cases, such as systematic reviews, researchers might need to sift through thousands of papers, only to find that many are irrelevant.

Moritz recalls one particular study where “the authors had to examine 3,500 scientific publications, only to discover that many were not applicable. It’s an enormous investment of time to extract a tiny amount of useful information — and it felt like a task tailor-made for AI automation.”

The founders recognized that modern language models could help with this burden, but an early data-extraction experiment with ChatGPT showed an 11% error rate. Impressive as that was, it fell short of the accuracy researchers require.

“That’s simply not good enough,” Moritz emphasized. “In these knowledge-intensive tasks, even if they seem menial, accuracy is paramount.”

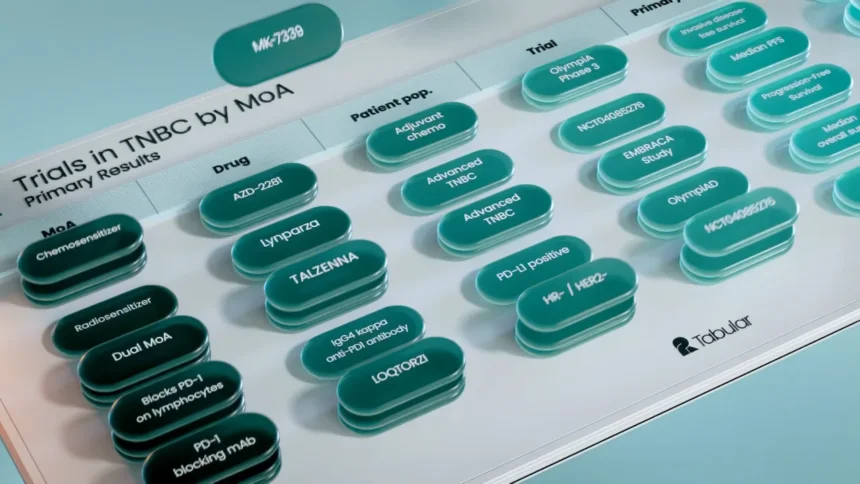

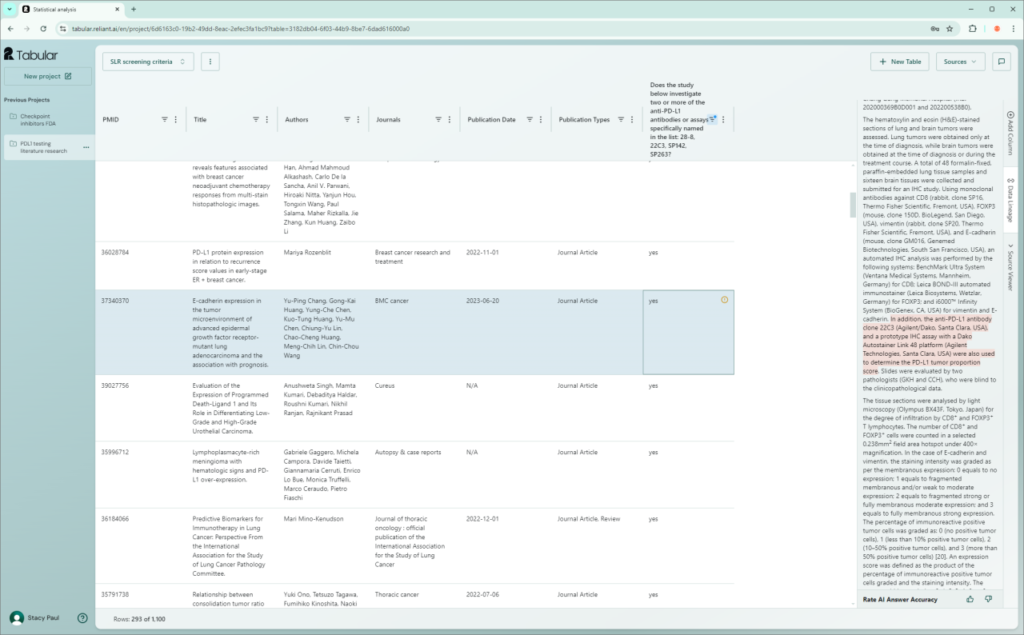

Reliant’s flagship product, Tabular, is built on a language model foundation (Llama 3.1) and enhanced with proprietary techniques that the company says make it significantly more effective. In the multi-thousand-study extraction task mentioned above, Tabular reportedly achieved zero errors.

Here’s how it works: you upload a thousand documents, specify the data you need, and Reliant’s system meticulously extracts that information, regardless of how well-structured or chaotic the sources might be. The extracted data, along with any requested analyses, are presented in a user-friendly interface that allows researchers to explore individual cases in depth.

“Our users need to interact with all the data at once, and we’re developing features that let them edit the data, or jump from the data back to the literature,” Moritz explained. “Our goal is to help users pinpoint where their attention is most needed.”

This focused, precise application of AI may be less glamorous than flashier ones, but it could accelerate progress across a variety of highly technical fields. Investors have taken notice: the company raised an $11.3 million seed round led by Tola Capital and Inovia Capital, with angel investor Mike Volpi participating.

However, like many AI applications, Reliant’s technology is computationally intensive, which is why the company has opted to buy its own hardware rather than rent it à la carte from major providers. Going in-house carries both risks and rewards: the machines are expensive, but dedicated compute gives the company room to push the boundaries of what’s possible.

“One of the challenges we’ve encountered is the difficulty of providing a high-quality answer when time is limited,” Moritz explained. For instance, if a scientist asks the system to perform a novel extraction or analysis task on a hundred papers, it can be done quickly, or it can be done well — but not both, unless the system can anticipate user needs and prepare answers, or something close to them, in advance.

“The reality is, many users have similar questions, so we can often preemptively find the answers,” noted Bellemare, the startup’s Chief Science Officer. “We can distill 100 pages of text into something concise, which may not be exactly what the user is looking for, but it provides a solid starting point.”

Consider this analogy: if you were tasked with extracting character names from a thousand novels, would you wait until someone requested the names to begin the work? Or would you identify and catalog names, locations, dates, and relationships ahead of time, knowing that this information would likely be needed? The latter approach makes the most sense — assuming you have the computational power to do so.
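The novel analogy can be sketched in a few lines of Python. This is only an illustration of the precompute-then-look-up idea, not Reliant’s system: the corpus, the `extract_fields` function, and the field names are all hypothetical, and the regular expressions stand in for what would really be an expensive model call.

```python
import re

# Hypothetical two-document corpus; not real data.
DOCS = {
    "novel_a": "Anna met Boris in Paris in 1999.",
    "novel_b": "Clara wrote to Anna from Vienna in 2004.",
}

def extract_fields(text):
    """Toy stand-in for an expensive extraction model (assumption)."""
    # Four-digit years starting with 19 or 20.
    years = re.findall(r"\b(?:19|20)\d{2}\b", text)
    # Capitalized words as a crude proxy for names and places.
    proper_nouns = re.findall(r"\b[A-Z][a-z]+\b", text)
    return {"years": years, "proper_nouns": proper_nouns}

def build_index(docs):
    """Run extraction once per document, before anyone asks."""
    return {doc_id: extract_fields(text) for doc_id, text in docs.items()}

# The costly work happens here, ahead of any request.
INDEX = build_index(DOCS)

def lookup(field):
    """Answer a request from the precomputed index; no extraction at query time."""
    return {doc_id: fields[field] for doc_id, fields in INDEX.items()}

print(lookup("years"))
print(lookup("proper_nouns"))
```

The trade-off is the one described above: `build_index` spends compute on fields nobody has requested yet, so that `lookup` can respond immediately when someone does.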

This proactive extraction process also allows Reliant’s models to resolve the ambiguities and assumptions inherent in different scientific fields. For example, when one metric “indicates” another, the meaning might differ across domains like pharmaceuticals, pathology, or clinical trials. Additionally, language models tend to produce varying outputs depending on how they’re prompted. Reliant’s mission is to transform ambiguity into certainty — a task that requires deep investment in specific sciences or domains, according to Moritz.

As a company, Reliant’s immediate focus is on proving that its technology is economically viable before pursuing more ambitious goals. “To make meaningful progress, you need a big vision, but you also have to start with something concrete,” Moritz explained. “From a startup survival perspective, we’re focusing on for-profit companies because they provide the revenue needed to cover our GPU costs. We’re not selling this at a loss.”

Despite the competitive landscape, which includes giants like OpenAI and Anthropic, as well as implementation partners like Cohere and Scale, Reliant remains optimistic. “We’re building on a solid foundation — any improvements in our tech stack benefit us,” Bellemare said. “The LLM is just one of the many large machine learning models in our system — the others are entirely proprietary, developed from scratch using data exclusive to us.”

The transformation of the biotech and research industry into one driven by AI is still in its early stages and may continue to evolve unevenly. However, Reliant seems to have carved out a strong niche from which to grow.

“If you’re content with a 95% solution and are willing to occasionally apologize to your customers, that’s fine,” Moritz concluded. “But we’re focused on scenarios where precision and recall are crucial, and where errors can have significant consequences. For us, that’s enough — we’re happy to let others handle the rest.”
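For readers unfamiliar with the two terms Moritz mentions: precision is the share of extracted items that are correct, and recall is the share of correct items that were actually found. A minimal sketch of the standard definitions follows; the function name and the sample values are made up for illustration and say nothing about Reliant’s own evaluation.

```python
def precision_recall(extracted, truth):
    """Compute precision and recall for a set of extracted items."""
    extracted, truth = set(extracted), set(truth)
    true_positives = len(extracted & truth)
    # Precision: of everything extracted, how much was correct?
    precision = true_positives / len(extracted) if extracted else 0.0
    # Recall: of everything that should have been found, how much was?
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall

# Hypothetical example: 4 items extracted, 5 items truly present, 3 in common.
extracted = {"a", "b", "c", "d"}
truth = {"a", "b", "c", "e", "f"}
precision, recall = precision_recall(extracted, truth)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

A system can score well on one measure and poorly on the other, which is why tasks like systematic reviews care about both.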