How do machine learning models achieve what they do? Are they actually “thinking” or “reasoning” in ways we humans understand, or is something entirely different going on? This is as much a philosophical question as it is a technical one. However, a new study making waves suggests that, for now, the answer is a clear “no.”

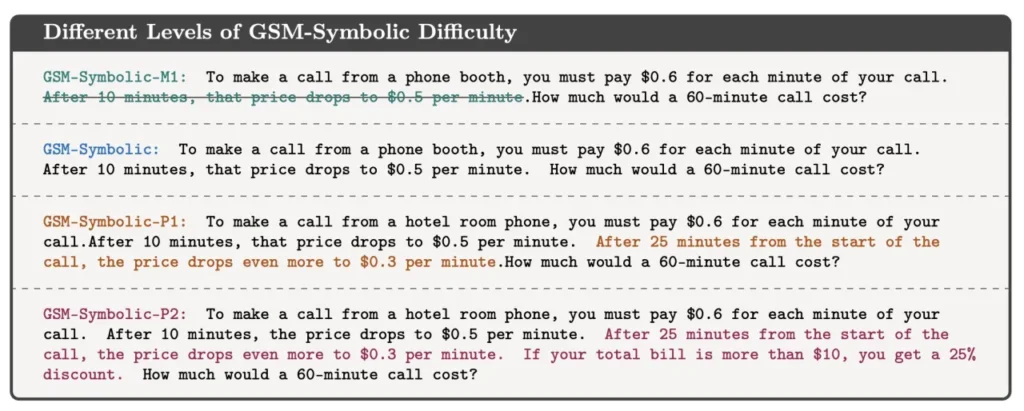

A team of AI researchers at Apple recently released a paper titled Understanding the Limitations of Mathematical Reasoning in Large Language Models. While the details delve deep into topics like symbolic learning and pattern recognition, the key takeaway from their research is surprisingly straightforward.

Let’s break it down.

A Simple Math Problem – And Where It All Goes Wrong

Consider this basic math problem:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday. How many kiwis does Oliver have?

The answer is simple: 44 + 58 + (44 * 2) = 190. Even though large language models (LLMs) tend to be hit-or-miss with arithmetic, they can usually handle a problem like this. But what happens if we add a small, seemingly irrelevant detail?

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the number of kiwis he did on Friday, but five of them were a bit smaller than average. How many kiwis does Oliver have?

It’s the same math problem, right? Any grade-schooler would easily reason that a small kiwi is still a kiwi, so the answer remains unchanged. But for state-of-the-art LLMs, that extra piece of random information is enough to cause a major breakdown.

Take GPT-o1-mini’s response to the modified problem:

“… on Sunday, 5 of these kiwis were smaller than average. We need to subtract them from the Sunday total: 88 (Sunday’s kiwis) – 5 (smaller kiwis) = 83 kiwis.”

Clearly, the model is confused. It makes the bizarre assumption that the smaller kiwis should be excluded from the total. This is just one example among hundreds tested by the researchers. In nearly all cases, when small modifications were introduced, the models’ accuracy dropped dramatically.

Why Do LLMs Fail on These Simple Adjustments?

If these models “understood” the problem, why would such trivial details throw them off so easily? The researchers argue that this consistent failure suggests the models don’t truly understand the problem at all. While their training data allows them to generate correct answers in straightforward cases, they falter when actual reasoning—such as deciding whether small kiwis should count—becomes necessary.

According to the paper:

“We investigate the fragility of mathematical reasoning in these models and demonstrate that their performance significantly deteriorates as the number of clauses in a question increases. We hypothesize that this decline is due to the fact that current LLMs are not capable of genuine logical reasoning; instead, they attempt to replicate the reasoning steps observed in their training data.”

This finding aligns with what we already know about LLMs’ proficiency with language. For example, they can easily predict that the phrase “I love you” will likely be followed by “I love you, too,” but that doesn’t mean they feel love. Similarly, they can follow complex reasoning paths they’ve encountered before, but these paths can be broken by even the smallest deviation, indicating that what they’re doing is more akin to pattern-matching than actual reasoning.

The Role of Prompt Engineering – A Fix or a Band-Aid?

Mehrdad Farajtabar, one of the co-authors of the paper, broke down its key points in a Twitter thread, garnering attention from the AI research community. Notably, some researchers from OpenAI praised the study but disagreed with its conclusions. One OpenAI researcher suggested that with a bit of “prompt engineering”—carefully crafting the input to guide the model—many of these failure cases could be corrected.

While Farajtabar graciously acknowledged the point, he countered by explaining that while prompt engineering may help with simple tweaks, the more complex and distracting the additional information, the more context the model would need to avoid tripping up. In essence, you’d need to keep feeding the model increasingly specific instructions just to solve problems that any child could handle intuitively.

So, Can LLMs Really “Reason”? Or Are They Just Really Good at Guessing?

This debate begs the larger question: Are LLMs truly reasoning, or are they simply imitating reasoning based on patterns they’ve observed in their training data? The answer is elusive. As the researchers point out, concepts like “reasoning” and “understanding” aren’t well-defined in the context of artificial intelligence. What we do know is that today’s LLMs struggle with even basic reasoning tasks when irrelevant or distracting information is introduced.

This has broad implications for how we understand and use AI. For example, while LLMs excel at tasks like language translation, summarization, or even generating code, their limitations become glaringly apparent when deeper, human-like reasoning is required. And while engineers can tweak prompts to improve outcomes, this workaround doesn’t address the root of the issue: these models lack a true understanding of the tasks they’re performing.

The Future of AI Reasoning: Caution and Curiosity

Does this mean that LLMs can’t ever reason? Not necessarily. AI research is advancing at a breakneck pace, and what seems impossible today may well be achievable tomorrow. Perhaps LLMs are reasoning, but in a way we don’t yet fully comprehend or know how to guide.

This uncertainty presents both a fascinating frontier for researchers and a cautionary tale for everyday users. As AI becomes more integrated into our lives—from customer service bots to personal assistants to creative tools—the question of what these models can really do becomes more than just academic.

So, the next time someone asks if AI can “think,” it might be worth pausing before answering. As it stands, the technology is powerful but far from perfect. And when it comes to reasoning, it still has a lot to learn—just like the rest of us.

By framing AI’s limitations in this way, we can better understand both the potential and the pitfalls of this evolving technology. In the meantime, stay curious, keep questioning, and remember: just because an AI can talk like us, doesn’t mean it thinks like us.