How fake music targets real artists.

The first sign something was off came through my Spotify Release Radar. A new HEALTH album popped up, but something about it felt wrong. The cover art was off-brand for the band, and when I played it, the music didn’t sound like HEALTH at all. It turned out to be one of three fake albums uploaded to their artist page that weekend.

The band’s account on X made light of the situation, joking about the imposters. The fraudulent albums were eventually removed, and I chalked it up to a one-off mishap. But the following weekend, it happened again, this time to Annie. The fake album seemed plausible at first, since Annie had recently released a single, “The Sky Is Blue.” However, when I clicked in, the tracklist didn’t match up. I played it and was greeted by a mishmash of bird sounds and New Age instrumentals. It wasn’t Annie.

“That was upsetting to me because if you have ears, you can definitely hear it’s not our music,” one artist later told me.

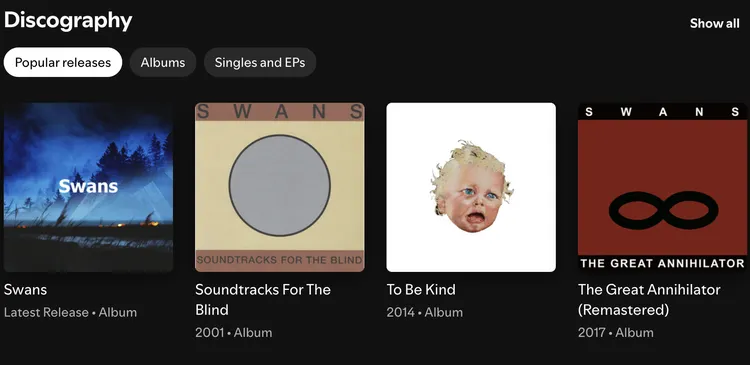

It turned out Annie and HEALTH weren’t alone. They were part of a broader problem on Spotify, where many musicians discovered their pages hijacked by AI-generated slop. Metalcore bands like Caliban, Northlane, and Silent Planet were among the early targets, as were a suspicious number of artists with single-word names like Swans, Asia, and Gong. Some of these fakes disappeared within days, while others lingered for weeks or months, unchecked and unchallenged.

The Broken Honor System of Music Distribution

To understand how this happens, you need to know how music distribution works. Artists don’t upload albums directly to Spotify the way you might post to your own Facebook page. Instead, they go through distributors that handle metadata, licensing, and royalty payments. These distributors deliver vast amounts of music in bulk, and Spotify relies on the accompanying metadata to put each release on the right artist page. The whole process largely operates on the honor system.

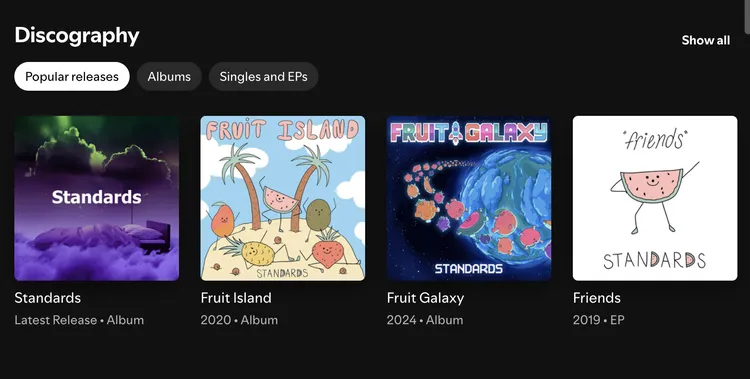

Fraudsters exploit this loophole by falsely claiming to represent legitimate artists. A distributor might unknowingly upload fake content, which Spotify then accepts at face value. For instance, when Standards, an instrumental math-rock band, saw a fake album appear on their page in late September, their lead guitarist Marcos Mena assumed it was a simple mistake. But when he contacted Spotify, he received a disappointing automated response:

“It looks like the content is mapped correctly to the artist’s page. If you require further assistance, please contact your music provider. Please do not reply to this message.”

The fake album stayed on Standards’ page until early November, despite Mena’s efforts to get it removed. “That was upsetting to me,” Mena said. “It’s definitely a bummer because we did have a new album come out this year, and I feel like [the fake album] is detracting from that.”

Fraud as a Business Model

Why would anyone go to the trouble of uploading fake albums? The answer, unsurprisingly, is money. Spotify pays royalties based on streams, with payouts going through the distributor. When someone streams a fake album, the royalties are funneled not to the artist but to whoever uploaded the counterfeit.

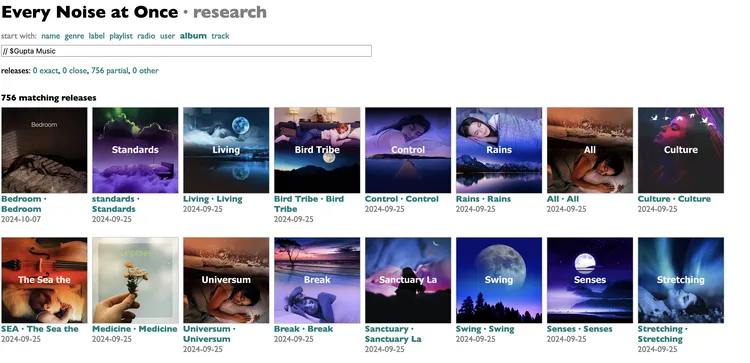

Consider “Gupta Music,” the supposed label behind the fake Standards album. A search for Gupta Music on Every Noise at Once turns up more than 700 releases, many with AI-generated cover art and generic single-word band names like Rany, Bedroom, and Culture. Each of these albums can generate royalties, and across hundreds or thousands of fraudulent tracks, those royalties can add up to significant payouts.

It’s not just Spotify, either. This type of scam plays out across more than 100 streaming platforms worldwide. And while Spotify recently cut ties with Ameritz Music, the licensor responsible for this wave of fakes, the problem is far from solved.

The AI Factor: Accelerating the Problem

AI tools have turbocharged these scams. Fraudsters no longer need to remix or plagiarize existing tracks; they can generate entirely new songs with minimal effort. They also exploit misspellings, uploading tracks under names like Kendrik Laamar, Arriana Gramde, and Llady Gaga to siphon off streams from listeners who mistype a search.

Earlier this year, a Danish man was sentenced to 18 months in prison for using bots to artificially inflate streams on Spotify, a scheme that earned him $300,000 in royalties. In a separate case, Michael Smith allegedly made $10 million over seven years by using AI to generate a massive catalog of fake songs, which he then streamed using bots.

“People upload massive amounts of albums that are intended to be streaming fraud albums,” says Andrew Batey, CEO of Beatdapp, a company focused on preventing streaming fraud. He estimates that $2–3 billion is stolen annually from legitimate artists due to schemes like these.

A System Struggling to Keep Up

Spotify claims to “invest heavily in automated and manual reviews” to combat fraud. However, the sheer volume of uploads and the complexity of the scam make it a challenging battle. Fraudsters often use multiple fake labels and distributors to obscure their tracks. Some even hack real users’ accounts to disguise fraudulent streams among legitimate activity.

Meanwhile, the distribution industry has its own incentive problem. Distributors make money from the music they deliver, whether through upload fees or a cut of artists’ royalties, so they have little financial reason to crack down on fraud unless forced to. Lawsuits like the one Universal Music Group (UMG) recently filed against Believe and TuneCore might change that calculus. UMG’s lawsuit alleges that Believe knowingly distributed infringing content, including tracks attributed to fake artists with names designed to trick users.

The Bigger Picture: A Crisis of Trust

For Spotify, the rise of AI-generated fraud poses an existential threat. The platform’s value lies in its ability to connect users with the music they love. If listeners regularly encounter garbage masquerading as their favorite artists, trust in the platform will erode.

AI is just the latest accelerant for a problem that has existed for years, but its impact is hard to miss. Fraudsters now have the tools to create fake songs at scale, flooding Spotify with low-quality content. The result is a degraded user experience, much like what has happened to Facebook, Instagram, and even Google as they’ve become overrun with spam and low-value AI content.

A Way Forward?

Fixing this problem requires action at multiple levels. Distributors need better vetting processes, and streaming platforms like Spotify need to implement more robust safeguards. For instance, Spotify could flag uploads from unfamiliar distributors or labels that don’t match an artist’s existing catalog. Improved AI detection tools could also help identify when a new release doesn’t sound like the artist’s established style.
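To make the first idea concrete, here is a minimal Python sketch of the kind of catalog-consistency check a platform could run on incoming releases. It is not based on any Spotify system: the `Release` record, the `flag_suspicious` function, and every example name except Gupta Music are hypothetical, and real delivery metadata is far richer than four fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    # Hypothetical, simplified release record (not a real Spotify schema).
    title: str
    artist: str
    label: str
    distributor: str


def flag_suspicious(new: Release, catalog: list[Release]) -> list[str]:
    """Return reasons a new release looks out of place on an artist's page."""
    reasons: list[str] = []
    # Compare only against releases already attached to the same artist.
    history = [r for r in catalog if r.artist == new.artist]
    if not history:
        return reasons  # A brand-new artist has no history to compare against.
    known_distributors = {r.distributor for r in history}
    known_labels = {r.label for r in history}
    if new.distributor not in known_distributors:
        reasons.append(f"unfamiliar distributor: {new.distributor}")
    if new.label not in known_labels:
        reasons.append(f"unfamiliar label: {new.label}")
    return reasons


catalog = [Release("Debut", "Example Band", "Example Records", "Distributor A")]
fake = Release("Impostor LP", "Example Band", "Gupta Music", "Distributor B")
print(flag_suspicious(fake, catalog))
# ['unfamiliar distributor: Distributor B', 'unfamiliar label: Gupta Music']
```

A check like this can only produce a signal for human review, not an automatic rejection: legitimate artists switch labels and distributors too, so treating every mismatch as fraud would punish exactly the indie musicians the next paragraph worries about.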

But the industry faces a delicate balancing act. Over-policing uploads risks alienating legitimate artists, especially indie musicians who often operate outside the mainstream system. Yet failing to act leaves the platform open to further exploitation by fraudsters, which could ultimately drive away listeners and artists alike.

As UMG’s lawsuit against Believe unfolds in the coming months, its outcome could reshape how distributors handle uploads and enforce quality control. For now, though, Spotify remains a battleground where legitimate musicians are fighting to reclaim their pages—and their royalties—from the rising tide of AI slop.